Bing: Duplicate & Similar Pages Blur Signals & Weaken SEO & AI Visibility

Fabrice Canel and Krishna Madhavan from the Microsoft Bing team published a useful blog post titled "Does Duplicate Content Hurt SEO and AI Search Visibility?" The short answer is yes, and the long answer is also yes.

I like how Fabrice Canel put it on X: "Similar pages blur signals and weaken SEO and AI visibility." Yep, when you give search engines like Bing mixed and confusing signals, it can cause issues in both traditional and AI search. This is why John Mueller from Google keeps talking about consistency.

That being said, the Bing blog post goes into the AI aspect of all of this. It reads:

- AI search builds on the same signals that support traditional SEO, but adds additional layers, especially in satisfying intent. Many LLMs rely on data grounded in the Bing index or other search indexes, and they evaluate not only how content is indexed but how clearly each page satisfies the intent behind a query. When several pages repeat the same information, those intent signals become harder for AI systems to interpret, reducing the likelihood that the correct version will be selected or summarized.

- When multiple pages cover the same topic with similar wording, structure, and metadata, AI systems cannot easily determine which version aligns best with the user's intent. This reduces the chances that your preferred page will be chosen as a grounding source.

- LLMs group near-duplicate URLs into a single cluster and then choose one page to represent the set. If the differences between pages are minimal, the model may select a version that is outdated or not the one you intended to highlight.

- Campaign pages, audience segments, and localized versions can satisfy different intents, but only if those differences are meaningful. When variations reuse the same content, models have fewer signals to match each page with a unique user need.

- AI systems favor fresh, up-to-date content, but duplicates can slow how quickly changes are reflected. When crawlers revisit duplicate or low-value URLs instead of updated pages, new information may take longer to reach the systems that support AI summaries and comparisons. Clearer intent strengthens AI visibility by helping models understand which version to trust and surface.
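
To get a rough feel for the clustering idea described above, here is a minimal sketch you could run against your own pages. To be clear, this is not how Bing or any LLM actually groups URLs; it is just one common way to spot near-duplicates, using word shingles and Jaccard similarity. The function names, the sample URLs, the shingle size of 3, and the 0.8 threshold are all assumptions made up for illustration.

```python
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (word tuples) in the lowercased text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_near_duplicates(pages: dict, threshold: float = 0.8) -> list:
    """Group URLs whose shingle sets overlap at or above the threshold.

    `pages` maps URL -> page text. The threshold is an illustrative
    assumption, not a value taken from the Bing post.
    """
    sets = {url: shingles(text) for url, text in pages.items()}
    parent = {url: url for url in pages}  # union-find forest

    def find(u):
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path compression
            u = parent[u]
        return u

    for u, v in combinations(pages, 2):
        if jaccard(sets[u], sets[v]) >= threshold:
            parent[find(u)] = find(v)

    clusters = {}
    for url in pages:
        clusters.setdefault(find(url), set()).add(url)
    # Only clusters with more than one URL are potential duplicates.
    return [c for c in clusters.values() if len(c) > 1]


# Hypothetical sample pages, for illustration only.
pages = {
    "/shoes": "our running shoes are light durable and built for long distance training on any road",
    "/shoes?ref=campaign": "our running shoes are light durable and built for long distance training on any road",
    "/boots": "these waterproof hiking boots keep your feet dry on muddy mountain trails all winter",
}
duplicate_clusters = cluster_near_duplicates(pages)
```

Any cluster this returns is a set of URLs worth reviewing: either make the differences between those pages meaningful, or decide which single version you want to represent the set.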

It is a really good blog post that goes into a ton of detail. I believe this is all relevant for Google Search and Google AI responses as well.

So go read the "Does Duplicate Content Hurt SEO and AI Search Visibility?" blog post.

Santa may check his list twice, but duplicate web content is one place where less is more. Similar pages blur signals and weaken SEO and AI visibility. Give your site the gift of clarity in our latest Bing Webmaster Blog. https://t.co/TPrOQGywHJ #Bing #SEO #AIsearch pic.twitter.com/EXwx8cSFw0

Fabrice Canel (@facan) December 19, 2025

Update from John Mueller of Google:

Yes! I think it's even more the case nowadays. Mainstream search engines have practice dealing with the weird & wonky web, but there's more than just that, and you shouldn't get lazy just because search engines can figure out many kinds of sites / online presences.

— John Mueller (@johnmu.com) December 24, 2025 at 8:41 AM

Forum discussion at X.

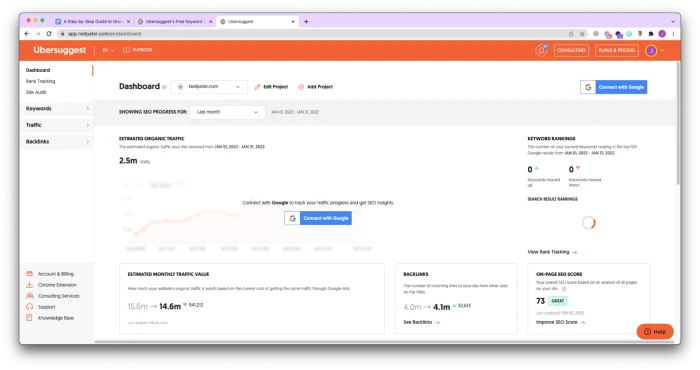

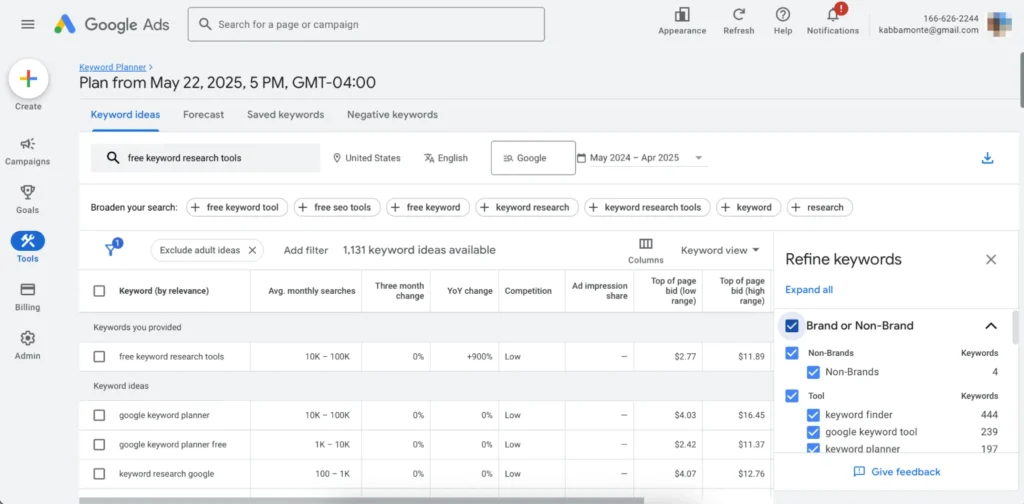

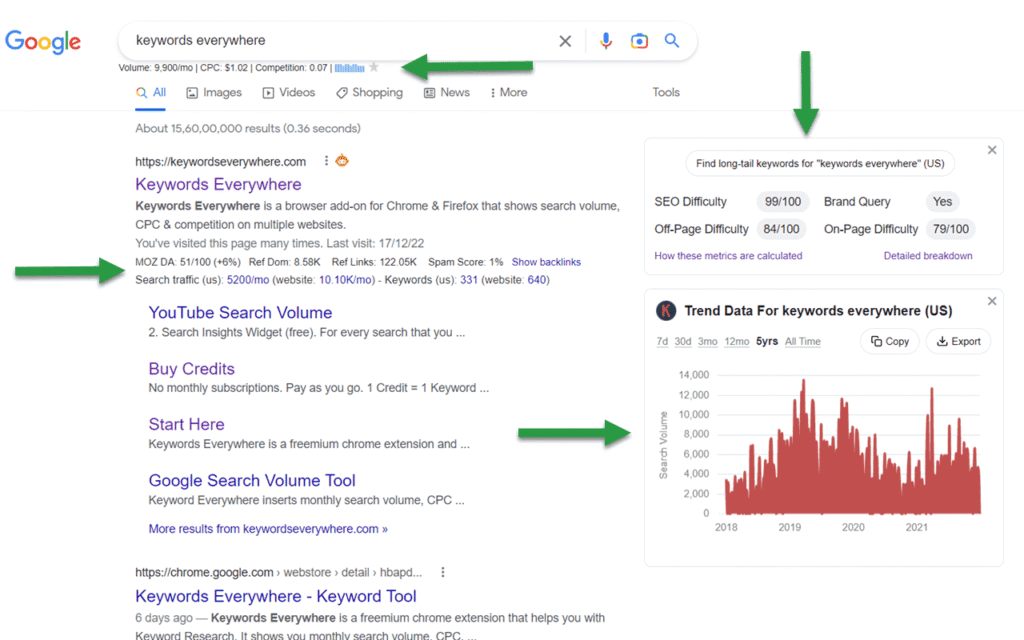

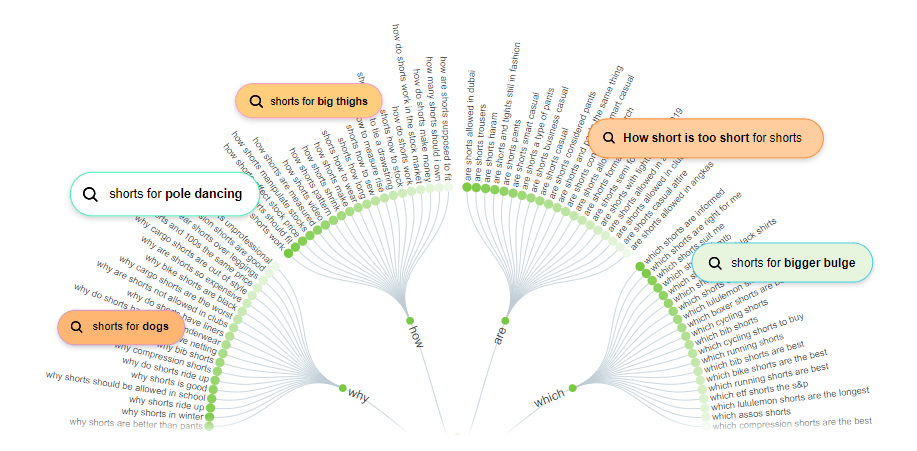

Keyword Research & Competitor Analysis.

Keyword Research & Competitor Analysis. : Make a list of 20–30 relevant keywords to target.

: Make a list of 20–30 relevant keywords to target. Technical

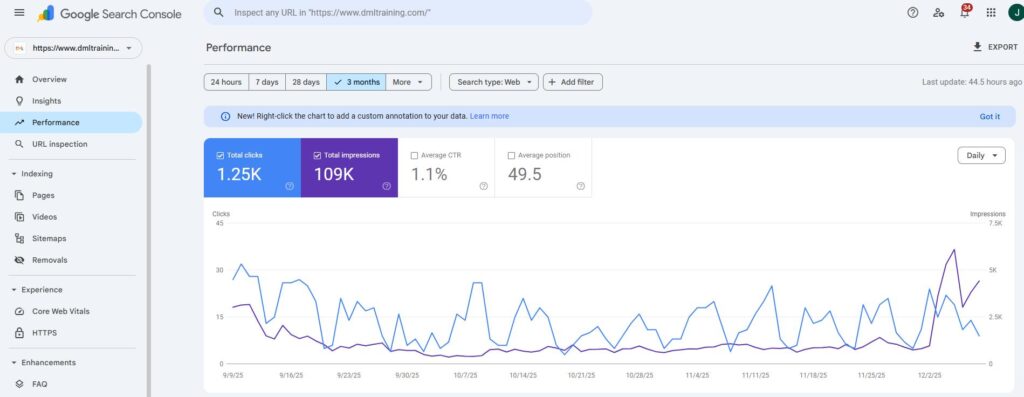

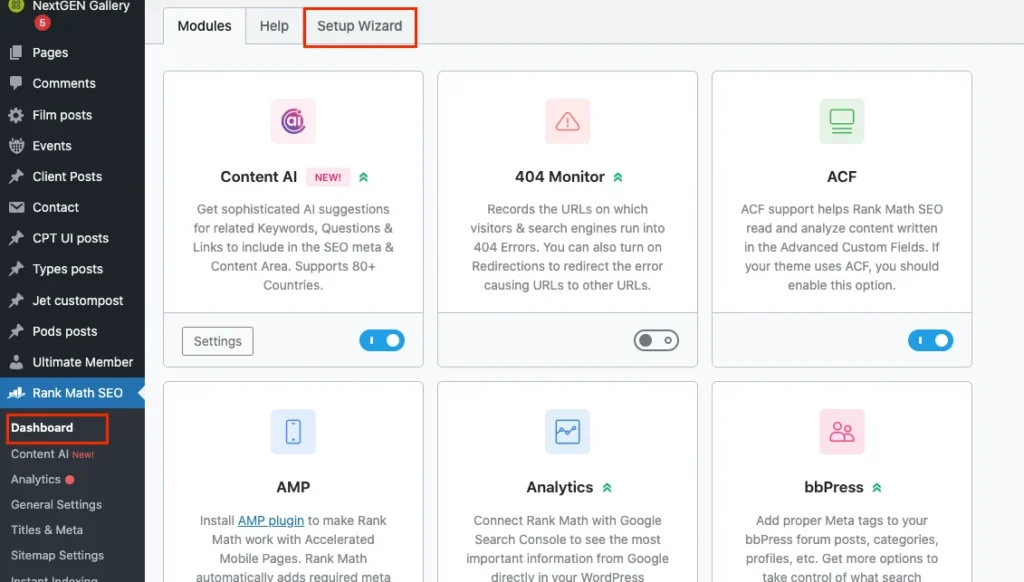

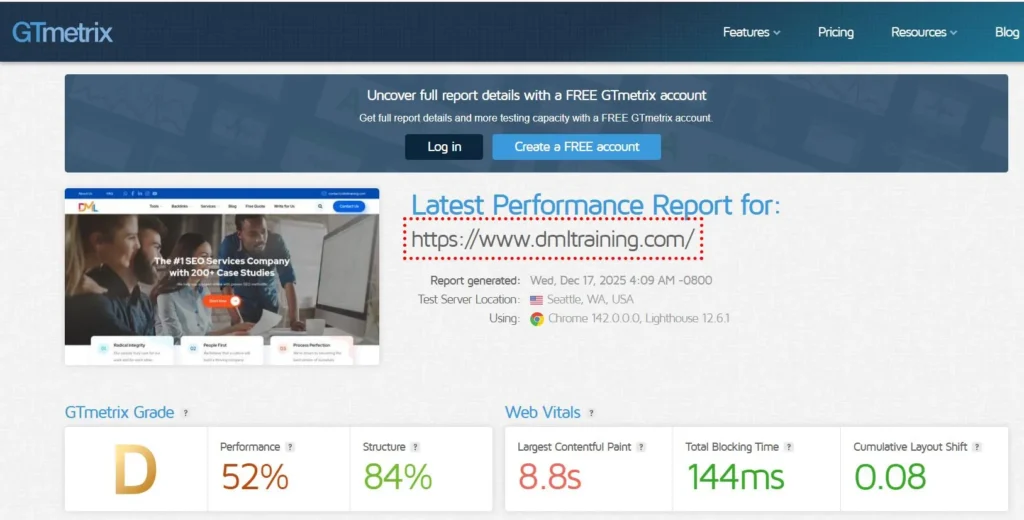

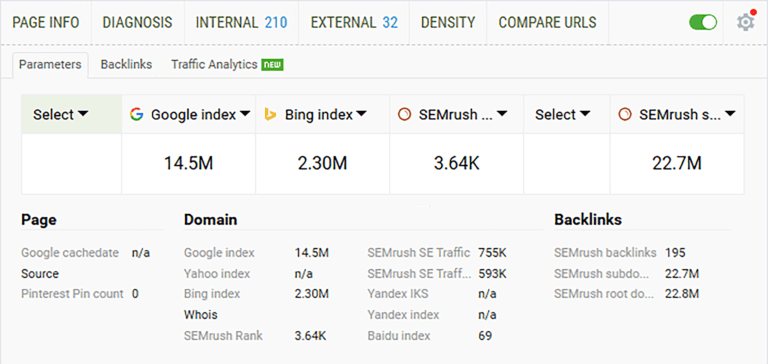

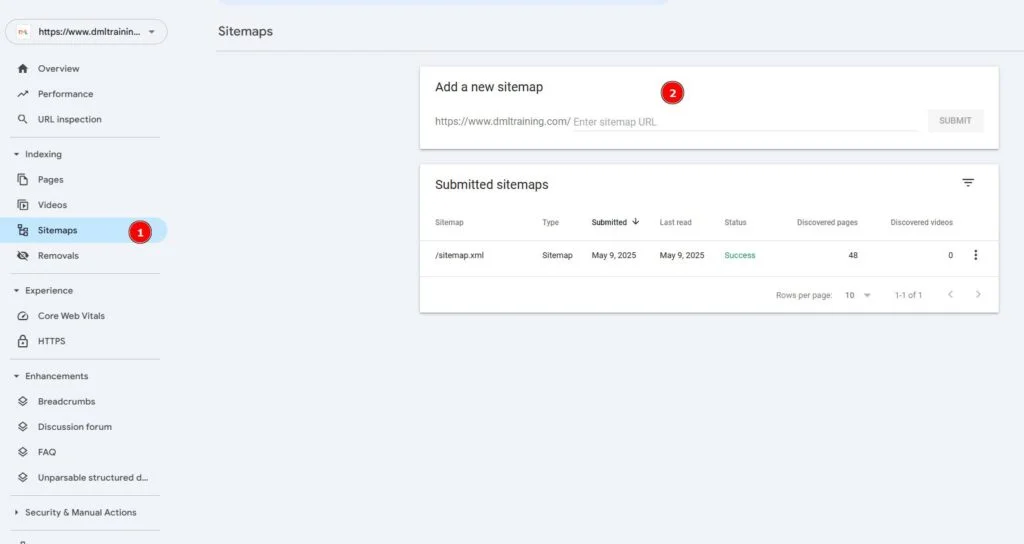

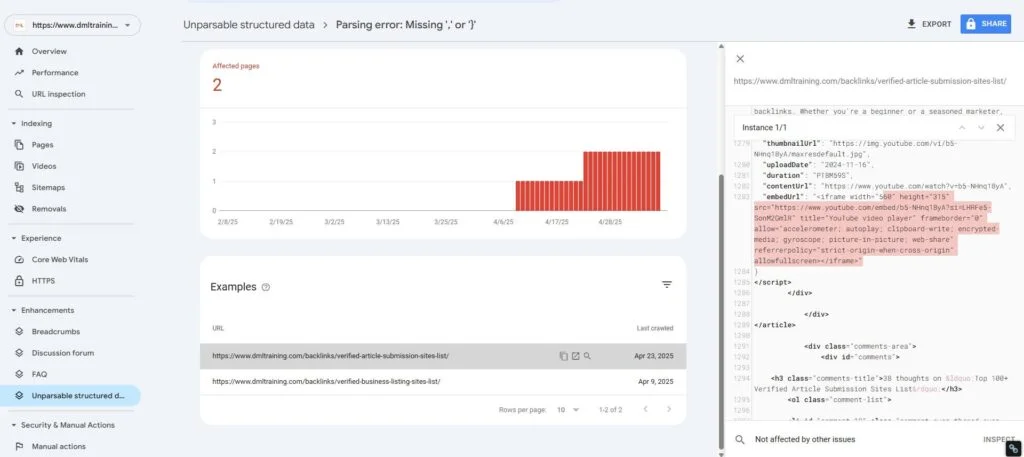

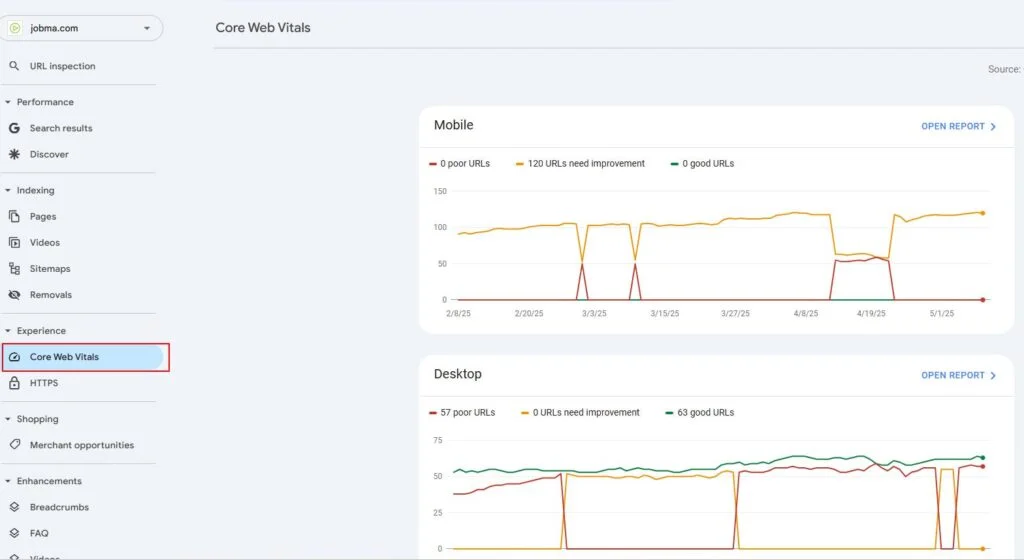

Technical  Use tools like Screaming Frog or Google Search Console to perform a quick audit of your website. Look for issues like broken links, slow loading times, or missing meta tags (title & description). Fix these issues to make sure that search engines can easily crawl and index your site.

Use tools like Screaming Frog or Google Search Console to perform a quick audit of your website. Look for issues like broken links, slow loading times, or missing meta tags (title & description). Fix these issues to make sure that search engines can easily crawl and index your site. SEO Content Strategy

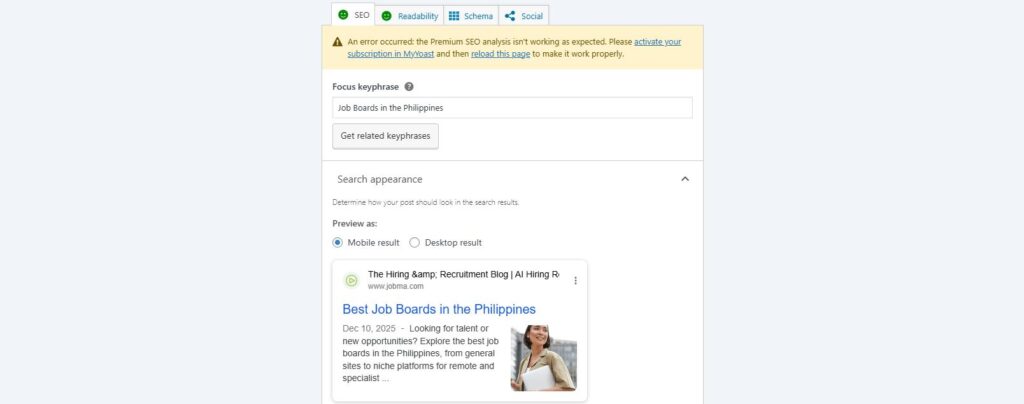

SEO Content Strategy On-Page Optimisation

On-Page Optimisation Focus on optimizing the key elements of your web pages. Don’t spread yourself too thin. This includes title tags, meta descriptions, headers (H1, H2, etc.), images, and internal linking. Make sure each page is optimized for the specific keyword you’re targeting + use secondary keywords.

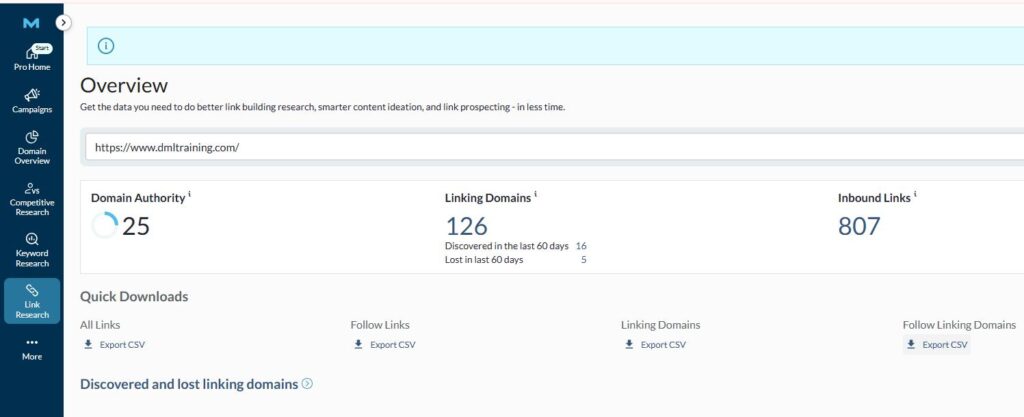

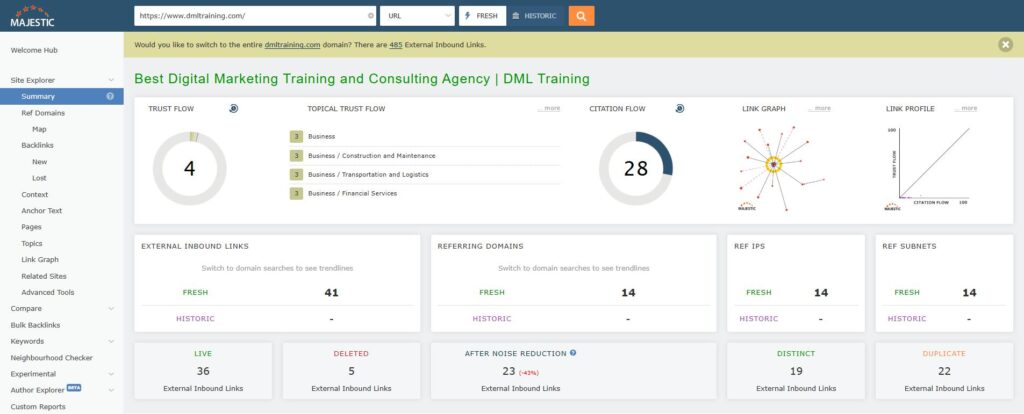

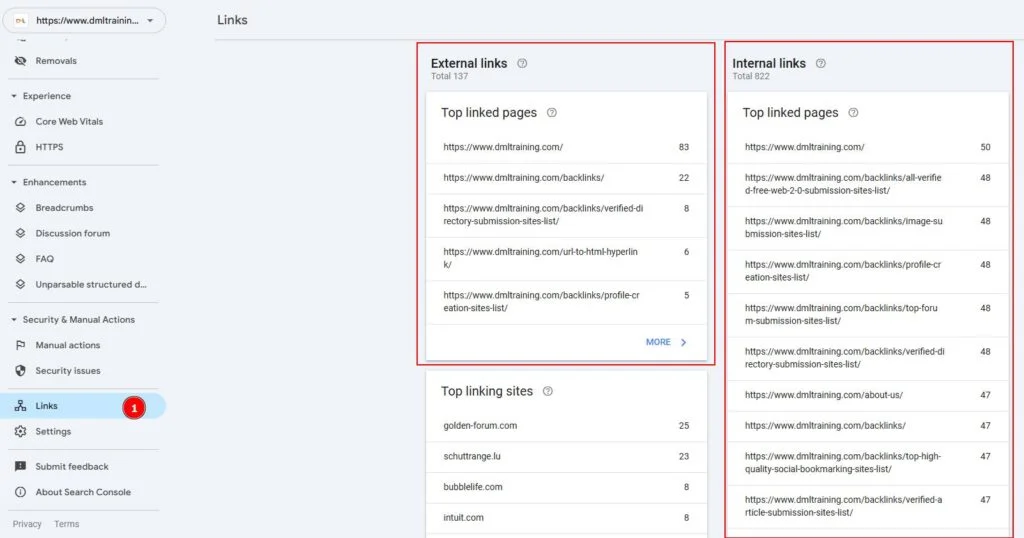

Focus on optimizing the key elements of your web pages. Don’t spread yourself too thin. This includes title tags, meta descriptions, headers (H1, H2, etc.), images, and internal linking. Make sure each page is optimized for the specific keyword you’re targeting + use secondary keywords. Backlink Strategy

Backlink Strategy Identify easy backlink opportunities, such as reclaiming unlinked brand mentions or submitting your site to relevant directories. Reach out to existing partners or use press releases or HARO (Help a Reporter Out) to gain high-quality backlinks.

Identify easy backlink opportunities, such as reclaiming unlinked brand mentions or submitting your site to relevant directories. Reach out to existing partners or use press releases or HARO (Help a Reporter Out) to gain high-quality backlinks. Local SEO and Social Media.

Local SEO and Social Media. If you have a local business, optimize your Google My Business listing and get listed in local directories. Use social media to share your content and build a community. SEO-wise, engaging with your audience on social platforms can drive traffic and backlinks.

If you have a local business, optimize your Google My Business listing and get listed in local directories. Use social media to share your content and build a community. SEO-wise, engaging with your audience on social platforms can drive traffic and backlinks. Review and Set KPIs

Review and Set KPIs : A fun nod to Ahrefs’ own search engine!

: A fun nod to Ahrefs’ own search engine!

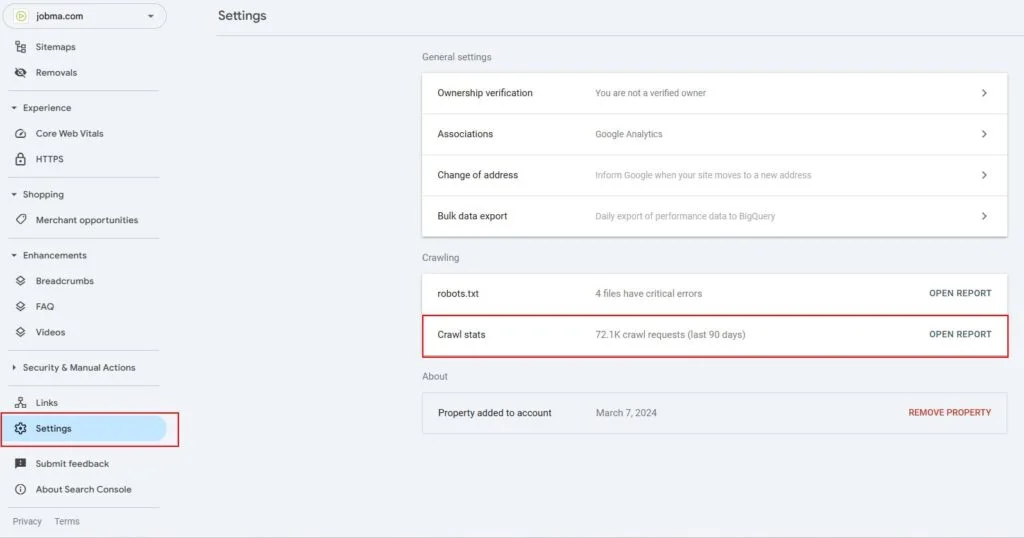

Format errors in robots.txt

Format errors in robots.txt Issues with blocked internal resources in robots.txt

Issues with blocked internal resources in robots.txt Issues with blocked external resources in robots.txt

Issues with blocked external resources in robots.txt 1. It Dilutes Your Rankings When several of your pages compete for the same keyword, Google doesn’t know which one to rank higher — reducing the potential performance of all pages involved.

1. It Dilutes Your Rankings When several of your pages compete for the same keyword, Google doesn’t know which one to rank higher — reducing the potential performance of all pages involved.

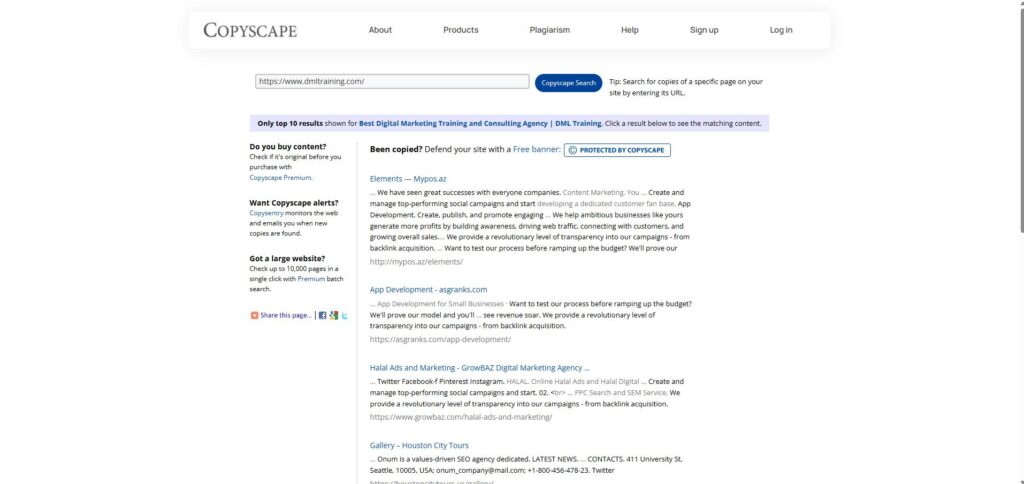

Audit Your Content

Audit Your Content