BrightonSEO October 2025 Slides

I spoke at BrightonSEO UK yesterday (23rd October 2025).

The title of the talk was How Google’s Search Index Works.

You can get the slides to the talk below.

I spoke at BrightonSEO UK yesterday (23rd October 2025).

The title of the talk was How Google’s Search Index Works.

You can get the slides to the talk below.

Subscribe to the newsletter to get more unique indexing insight straight to your inbox…

…or watch a demo of Indexing Insight which helps large-scale sites diagnose indexing issues.

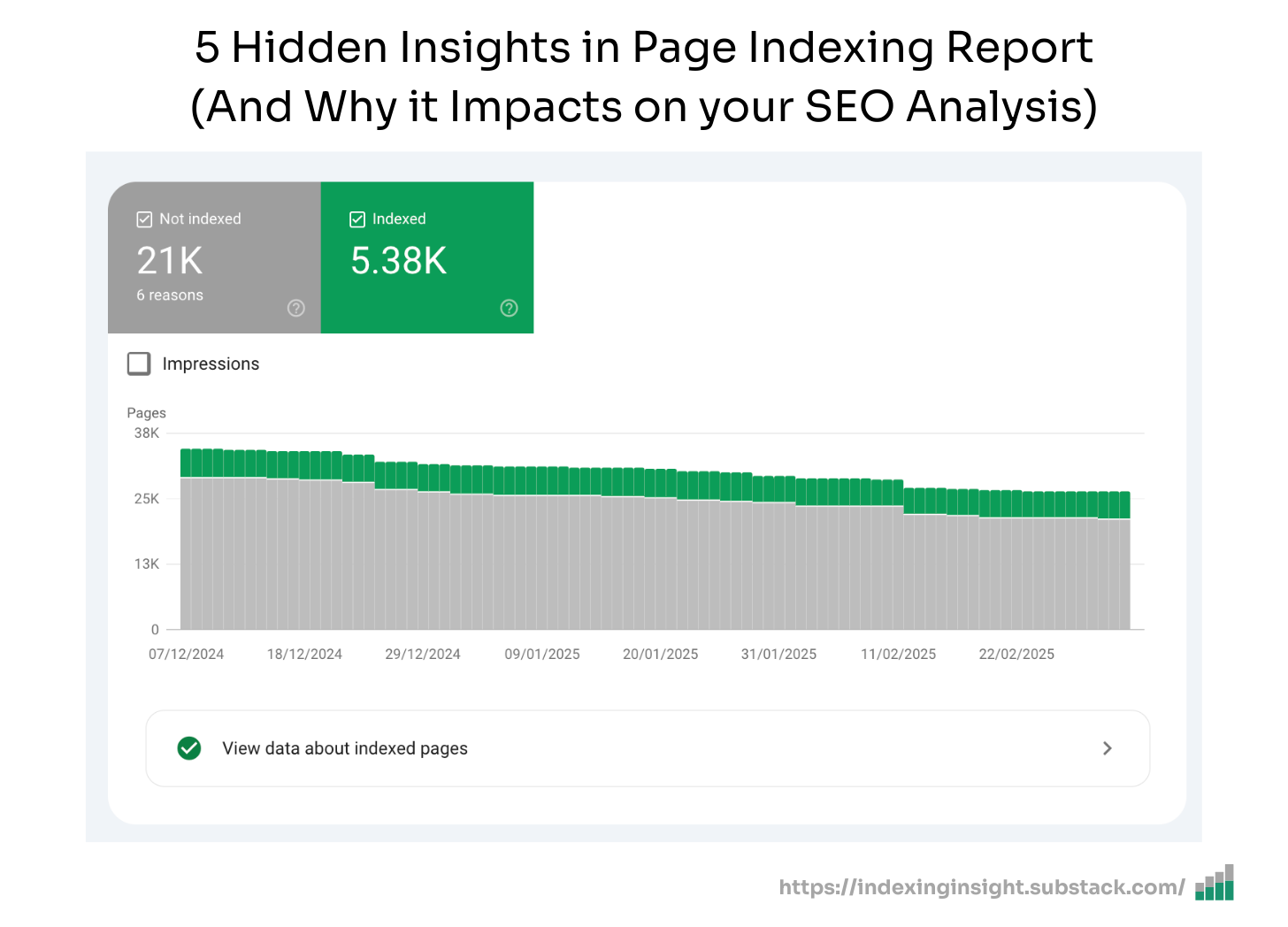

Many SEO teams misunderstand what the Page Indexing Report shows.

This confusion leads to poor decisions about technical SEO work. Understanding how Google’s index works helps you prioritise the right fixes.

This newsletter will cover the following topics:

What is Google’s index?

Page Indexing Report Explained

The difference between Indexed vs Not Indexed.

First, let’s get a foundational understanding of Google’s Search Index.

The Google Search Index seems complex (because it is)…

…but at a macro-level the Web Index is just a large series of databases that sit on thousands of computers. To quote Gary Illyes:

“The Google index is a large database spread across thousands of computers.” - Gary Illyes, How Google Search indexes pages

This is an important fact to understand because it changes how you look at the data in Google’s Search Console.

The Page Indexing report is a way to see which pages are Indexed and Not Indexed.

I believe that many SEO professionals and businesses widely misunderstood the data in this report.

Many believe that the Page Indexing Report shows you what is stored in Google’s index and what is excluded from Google’s index (databases).

This is inaccurate.

The reality is that Google stores ALL processed information about Indexed and Not indexed pages in it’s index.

For example, you can pull stored information from Google’s index for Not Indexed pages in the URL Inspection Tool and API.

Google’s URL Inspection Tool will provide you with the current data from it’s index.

When you’re looking at the Page Indexing Report remember: You are looking at ALL the processed and stored page indexing data about your website.

If the Page Indexing Report shows ALL stored and processed information in Google’s index. Then what is the difference between Indexed and Not Indexed?

The difference between the two verdicts is:

Indexed - Eligible to appear in Google’s search results.

Not Indexed - Not Eligible to appear in Google’s search results.

If a page is marked as Indexed it’s eligible to appear in Google’s search results.

This means that the content and page URL can be served to users in Google’s search engine results. It doesn’t garuntee it but the page can be shown.

And yes, a page needs to be Indexed for content or passage to appear in this also AI Search features.

If a page is marked as Not Indexed it’s eligible to appear in Google’s search results.

This means that the content and page URL will not be served to users in Google’s search engine results. And you can see the reasons why the processed pages are not eligable to appear in Google search engine results.

The Page Indexing report in Google Search Console is widely misunderstood by SEO professionals and business teams.

Many think it shows pages stored in Google’s index and not stored in the index.

In reality the Page Indexing Report shows all the processed pages in Google’s Search index about a website. Not just the indexed pages.

The difference between Indexed vs Not Indexed is:

Indexed - Eligible to appear in Google’s search results.

Not Indexed - Not Eligible to appear in Google’s search results.

Everything in the Page Indexing report is processed and stored in Google’s index.

What you’re looking at are pages that have the potential to be shown to users in search results and pages that Google has decided NOT to show to users.

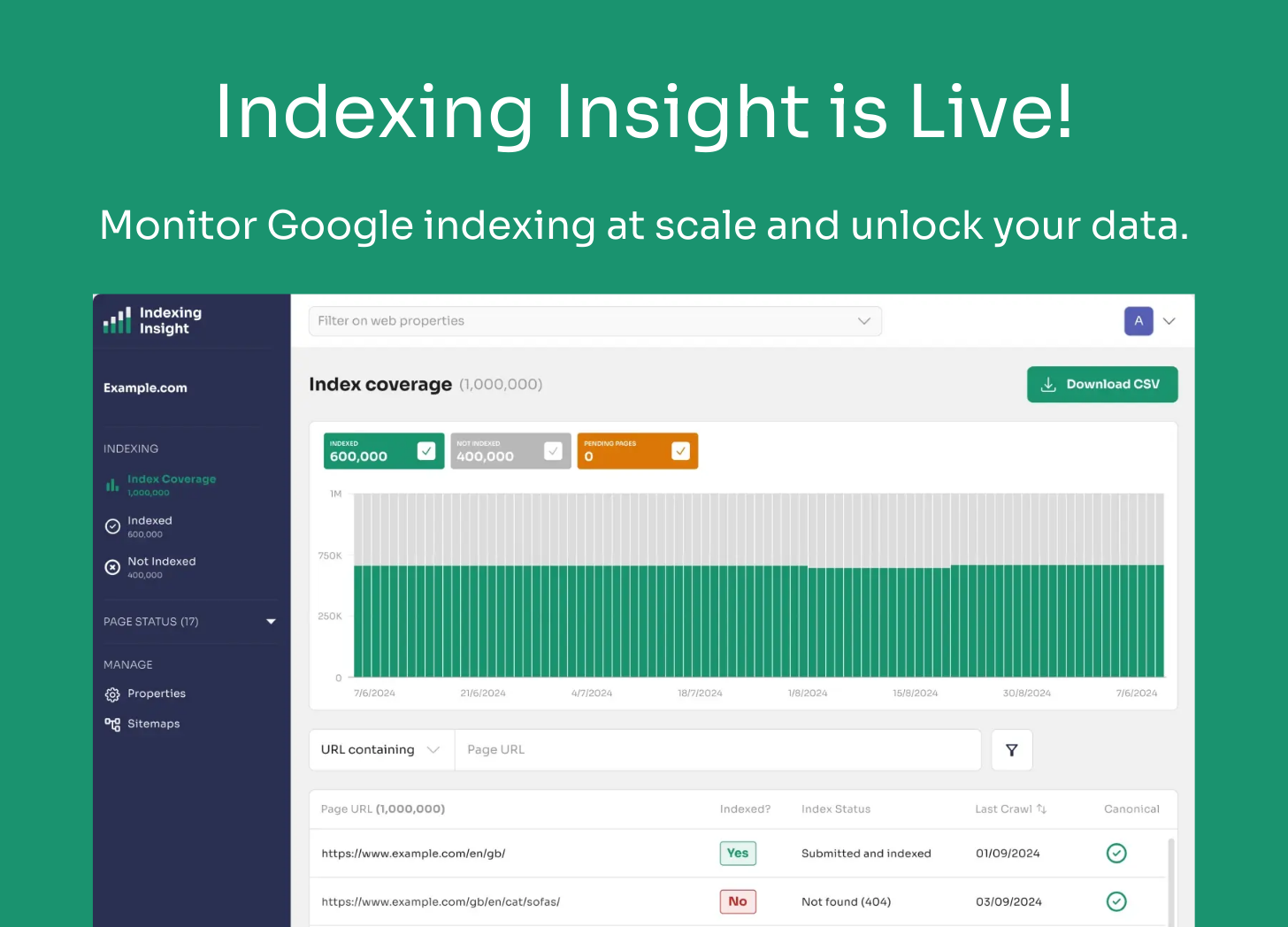

Indexing Insight is a Google indexing intelligence tool for SEO teams who want to identify, prioritise and fix indexing issues at scale.

Watch a demo of the tool below to learn more 👇.

Subscribe to the newsletter to get more unique indexing insight straight to your inbox…

…or watch a demo of Indexing Insight which helps large-scale sites diagnose indexing issues.

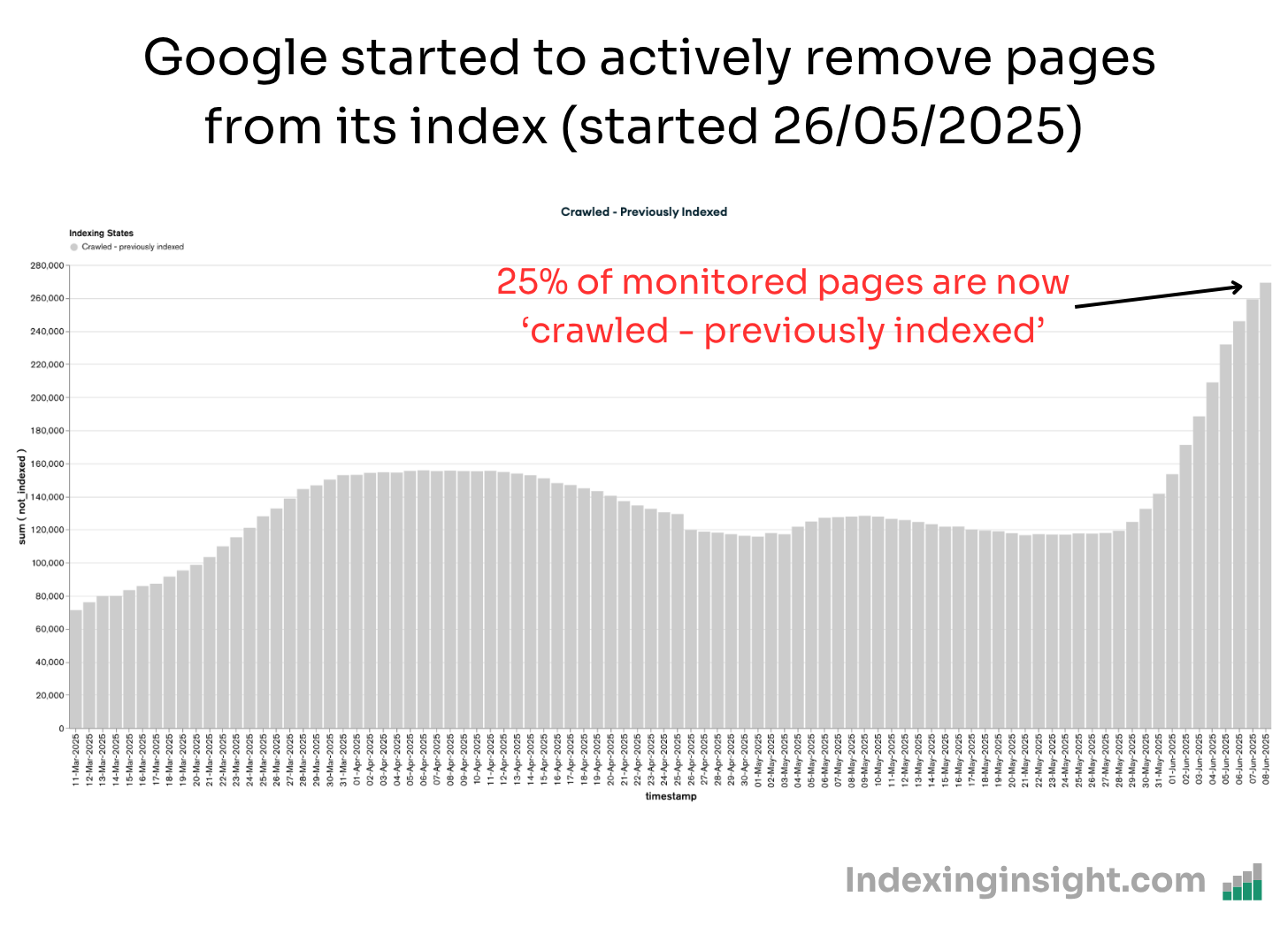

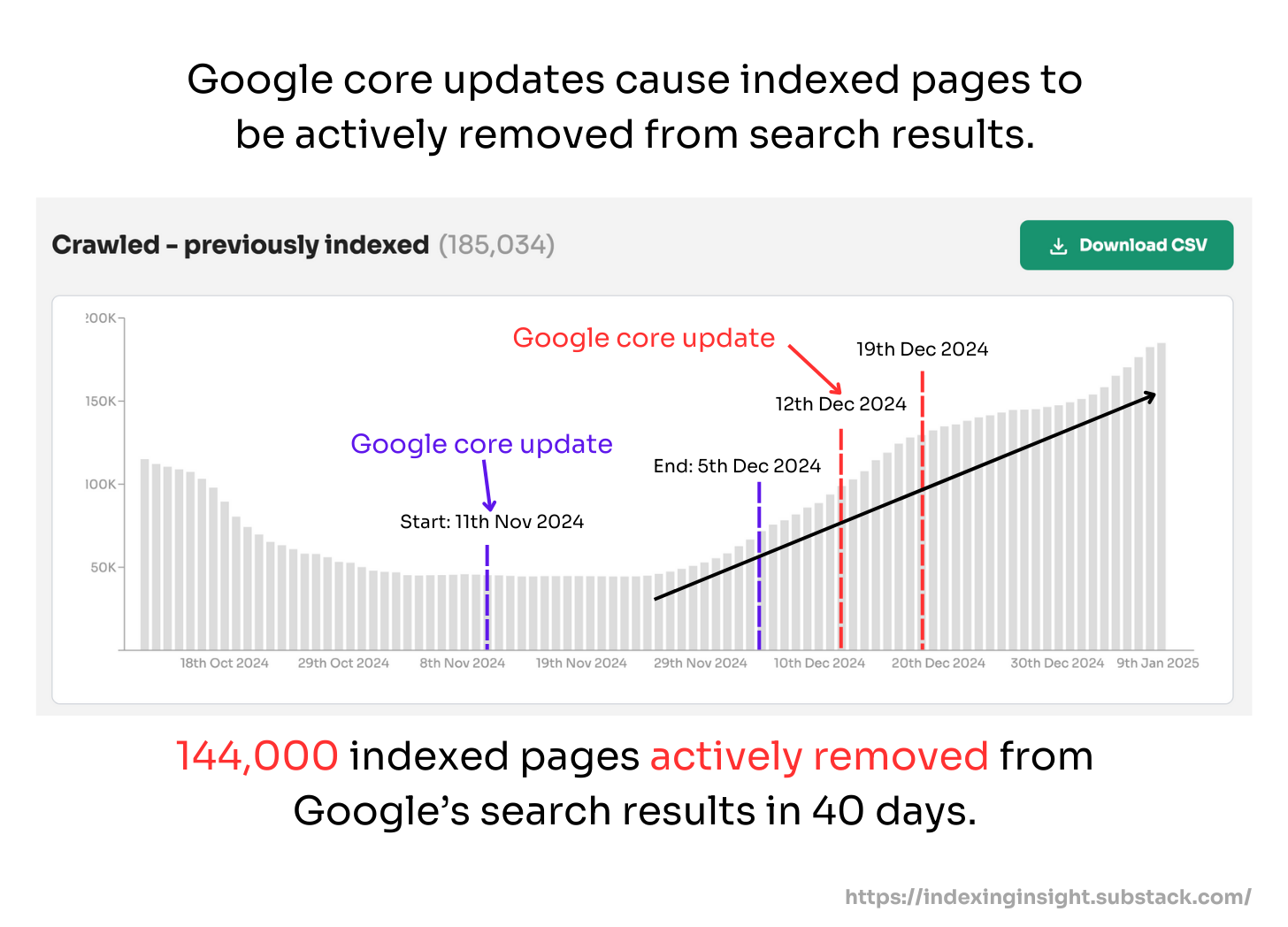

At Indexing Insight, we noticed a HUGE number of pages being actively removed from Google's search results at the end of May 2025.

This indexing purge was so large it caused many SEO professionals to notice that entire websites were being actively removed from Google's search index.

However, the current understanding of this purge is incomplete.

In this newsletter, I'll explain what really happened during the May 2025 index purge and why Google's official explanation doesn't tell the whole story.

I'll show evidence that this wasn't just "normal fluctuation" but one of the largest content purge we've ever tracked.

So, let's dive in.

I’ve broken down the findings and analysis into 5 parts:

Google indexing purge

25% of monitored pages were actively removed

Google broke the 130-Day Indexing rule

15% - 75% of indexed pages removed

Why Google removed the pages

At the end of May 2025, we noticed a massive increase in the number of pages that were being actively removed by Google’s Search index.

I raised that Google had made a MASSIVE update to its search index on LinkedIn.

The reaction to the quick post on LinkedIn, and on Twitter, was massive.

Many people reached out to me and provided screenshots of their Page Indexing report in Google Search Console.

This story, and screenshots, were also picked up by Barry Schwartz on SEORoundtable.

Whatever Google did at the end of May 2025 it had a huge impact on a large portion of its index. And caused many websites to have their indexed pages to be removed from Google’s index.

But why were these pages removed? And is this different to any other Google core update?

I dug into the Indexing Insight data to find out.

Since May 26th 2025 over 25% of monitored URLs have the indexing state ‘crawled - previously indexed’.

When an indexed page is actively removed from Google’s Search results the indexing state changes from ‘submitted and indexed’ to ‘crawled - currently not indexed’.

Since monitoring back in early 2024 we have not seen this level of active removal by Google across SO many websites.

Note: At Indexing Insight we noticed this pattern over a year ago and we created a new report ‘crawled - previously indexed’.

This new report helps our customers to identify exactly which pages are being actively removed from Google’s search results. And it’s this data that can be aggregated together and be shown over the last 90 days.

Google recrawled URLs in the last 90-130 days and then actively deindexed pages.

Previously, we (and others) have identified the 130-day indexing rule. The rule is simple: After 130 days of not being crawled a page is actively removed from Google’s search results (going from indexed to not indexed).

However, starting from May 26th this pattern reversed and it seems Google actively removed pages it had recrawled in 90-130 days.

In fact, comparing the days since last crawl time buckets before and after May 25 reveals that Not Indexed pages doubled or tripled.

What does this mean?

It means that Google didn’t wait the usual 130 days since last crawl to collect signals around these pages.

Instead, Google crawled or recrawled pages over the last 3 months and decided not to wait to deindex pages.

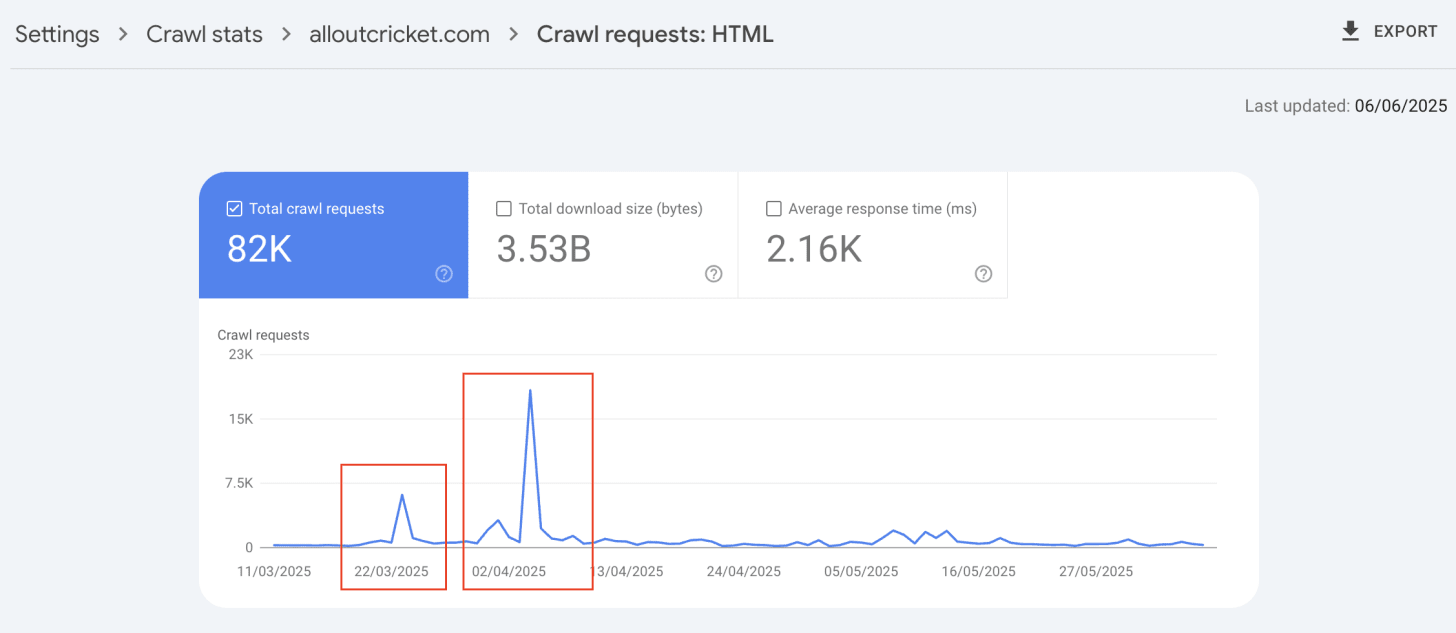

This can be seen in Google Search Console > Crawl Stats report.

From the accounts we have access to that saw 50-75% pages deindexed we can see in Crawl Stat reports that Googlebot crawling spike between March…

…and early May 2025.

Note: The spikes in crawling might be nothing to do with the indexing purge. As we’ve seen at Indexing Insight that crawling and deindexing aren’t always connected.

In fact the longer it takes for a live page to be crawled the greater the chance a page will be deindexed.

We don’t know if this 90-day indexing rule is here to stay or if this is just a one-off by Google to purge its index of low-quality content.

Here are some of my theories:

Threshold update - A test they are running to see the impact of tweaking the quality thresholds in its index to remove low-quality pages faster from search results.

Seasonal update - Google does “deindex” pages due to seasonal demands and they might be archiving indexed pages to make room for other more important pages.

Core update - Google may be getting ready to run a BIG core update and the index is just reacting to the new mini algorithms.

Your guess is as good as mine. But one thing we know is that something changed.

At Indexing Insight, we have a unique report called ‘crawled - previously indexed’.

This unique report tells us exactly which indexed pages have been actively deindexed by Google. This allows us to see exactly the impact of the May/June 2025 update.

In May-25 we’ve seen websites get 15% - 75% of monitored pages moved into the ‘crawled - previously indexed’ report.

The interesting thing about monitoring different websites is the variation in data.

What was interesting about this update was that not all websites saw such a huge spike in indexed pages being actively removed from the index.

Although they saw an increase in ‘crawled - previously indexed’ this was still only 1% - 3% of all monitored pages.

What is interesting is that not all websites seem to impacted by this May-25 index update. Some were impacted more than others…

…they question is what is causing these pages to be deindexed?

That’s what I found out in the final part of this article.

The reason pages are being actively removed is because of a lack of user engagement.

After analysing both the Search Analytics data we have access to AND reviewing the types of pages being actively removed, the pattern is clear.

Google actively purged a lot of “poor performing” pages from its index in May 2025.

There are two key reasons why this trend is clear when you review the data:

Zero or low-engagement pages

Zero impact on SEO performance

When reviewing the pages that we have Search Analytics data we noticed the same pattern: Pages actively deindexed by Google had low or zero SEO performance.

Let me show you some examples.

When checking the SEO performance of pages for atmlocation.pro you can see that the page did appear in Google Search. But barely had any clicks or impressions over the last 12 months.

For another publishing website, you can see that the page had a large spike in engagement and then nothing.

Finally, blog articles from a website with a lack of SEO performance (clicks and impressions) were actively deindexed by Google.

The same pattern is seen over and over again when reviewing pages that were actively deindexed in Google’s Search index.

Pages that had poor performance in Googe Search were actively purged.

The indexing purge had zero impact on the SEO performance of websites.

As you can see from the screenshot below, the removal of indexed pages has had zero impact on SEO clicks or impressions after late May-25 or early June-25.

This didn’t just happen to 1 website but other websites we had access to saw either no decline or a positive trend in clicks and impressions.

The screenshot below is of a website that had 75% of its important pages actively removed by Google’ Search index. However, it still saw a positive improvement in clicks and impressions during the June core update.

This further shows that Google actively removed a TONNE of inactive documents from its search index.

If thousands of pages get actively deindexed and it has zero impact on impressions or clicks…were those pages of use anyway?

Something happened in Google’s Search index at the end of May 2025.

And based on the reaction from the SEO community and website owners, the great purge impacted A LOT of websites. Of all shapes and sizes.

John Mueller, a Webmaster Trend Analyst at Google, replied to the comments of website owners on Bluesky (source) who saw massive drops in the number of indexed pages at the end of May-25:

“Thanks, everyone, for the sample URLs - very helpful. Based on these, I don't see a technical issue on our (or on any of the sites) side. Our systems make adjustments in what's crawled & indexed regularly. That's normal and expected. This is visible in any mid-sized+ website - you will see the graphs for indexing fluctuate over time. Sometimes the changes are smaller, bigger, up, or down. Our crawl budget docs talk about crawl capacity & crawl demand, they also play a role in indexing.” - John Mueller

The key thing to highlight here is that John mentioned that there was no “technical issues” on Google’s side. And there is a link between capacity/demand in indexing.

This lines up with what we’ve been seeing at Indexing Insight. However, we have NEVER seen such a large number of documents actively removed from Google’s index.

Our own customer data showed that 15-75% of indexed pages were actively deindexed by Google. These weren’t just small websites or brands. They were big, medium and small brands.

The common factor in why so many pages were deindexed?

Based on the available data, the most likely explanation was that Google purged a HUGE number of documents that didn’t drive any meaningful engagement (clicks, queries, swipes, impressions, etc.) from its search index.

This lines up with how Google’s Search Index works (based on Google patents).

The problem is that based on the data, Google’s index didn’t wait around the usual 1 - 130 days. Instead, the index seemed to purge content within days of being recrawled.

Why?

No idea. But we can make an educated guess. Here are a few ideas:

Seasonal search demand: Google needed to make more room within its index for a growing demand for more content within a topic/niche.

Core update: Google made updates to its system to get ready for its core update (which happened in June 2025), and the quality threshold increased which caused inactive pages which did not meet this threshold to be deindexed.

Quality threshold update: Google updated its quality threshold, based on stored signals in the index, which means moving forward it will get harder to get pages indexed.

These are all just ideas. And they might all be right…but also all be completely wrong.

Whatever happened in May-25 it’s clear to those who are tracking Google indexing that SOMETHING happened. And those pages that were removed from the index had zero engagement or value to Google.

Indexing Insight is a Google indexing intelligence tool for SEO teams who want to identify, prioritise and fix indexing issues at scale.

Watch a demo of the tool below to learn more 👇.

Subscribe to the newsletter to get more unique indexing insight straight to your inbox…

…or watch a demo of Indexing Insight which helps large-scale sites diagnose indexing issues.

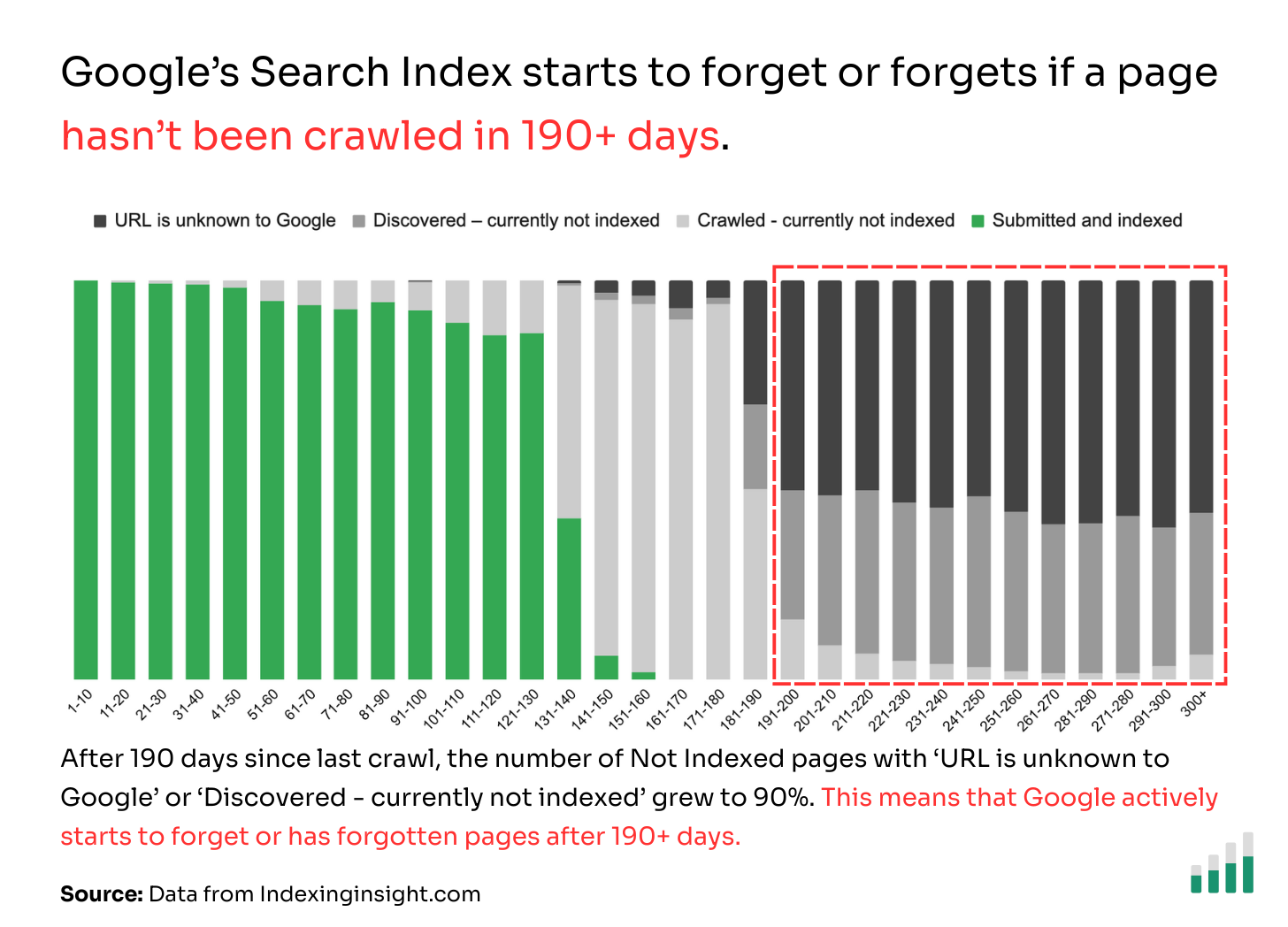

At Indexing Insight, a study has uncovered a 190-Day Not Indexed rule.

After 190 days since last crawl, Googlebot "forgets" a Not Indexed page even exists. This rule is based on a study of 1.4 million pages across 18 different websites (see methodology for more details).

Our study focused on combing the Days Since Last Crawl (based on Last Crawl Time) and the index coverage states from the URL Inspection API.

In this newsletter, I'll explain the 190-day rule for page forgetting and how it affects your SEO strategy.

Let's dive in.

The indexing data pulled in this study is from Indexing Insight. Here are a few more things to keep in mind when looking at the results:

👥 Small study: The study is based on 18 websites that use Indexing Insight of various sizes, industry types and brand authority.

⛰️ 1.4 million pages monitored: The total number of pages used in this study is 1.4 million and aggregated into categories and analysed to identify trends.

🤑 Important pages: The websites using our tool are not always monitoring ALL their pages, but they monitor the most important traffic and revenue-driving pages.

📍 Submitted via XML sitemaps: The important pages are submitted to our tool via XML sitemaps and monitored daily.

🔎 URL Inspection API: The Days Since Last Crawl metric is calculated using the Last Crawl Time metric for each page is pulled using the URL Inspection API.

🗓️ Data pulled at the end of March: The indexing states for all pages were pulled on 6/05/2025.

Only pages with last crawl time included: This study has included only pages that have a last crawl time from the URL Inspection API for both indexed or not indexed pages.

Quality type of indexing states: The data has been filtered to only look at the following quality indexing state types: ‘Submitted and indexed’, ‘Crawled - currently not indexed’, ‘Discovered - currently not indexed’ and ‘URL is unknown to Google’. We’ve filtered out any technical or duplication indexing errors.

Googlebot forgets or has forgotten pages that have not been crawled in 190 days.

Our data from 1.4 million pages across multiple websites shows that if a page has not been crawled in 190+ days then there is a 90% chance the page will be either start to be forgetten or forgotten by Google Search.

Below is the raw data to understand the scale of the pages in each category.

How did we come to this conclusion when looking at this data?

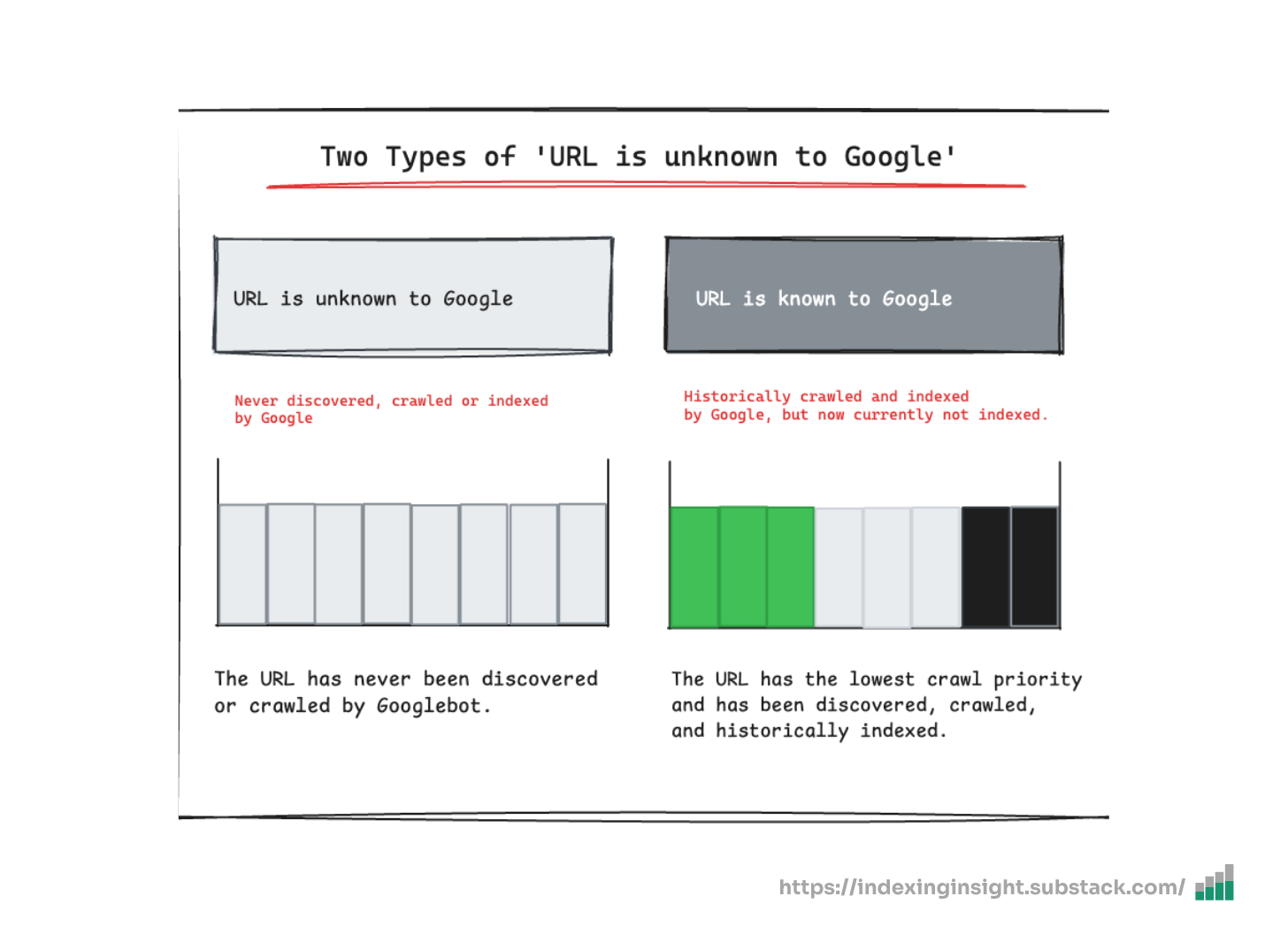

Over the last 12 months while building the tool we’ve noticed that three indexing states (‘Crawled - currently not indexed’, ‘discovered - currently not indexed’, and ‘URL is unknown to Google’) changed based on the URL's crawl priority.

Our research highlighted that the definition of these 3 not indexed coverage states in Google Search Console needs to change:

Crawled - currently not indexed: The page has either been discovered, crawled but not indexed OR the historically indexed page has been actively removed from Google’s search results.

Discovered—currently not indexed: A new page has been discovered but not yet crawled, OR Google is actively forgetting the historically indexed page.

URL is unknown to Google: A page has never been seen by Google OR Google has actively forgotten the historically crawled and indexed pages.

Note: You can read more about our research and data here:

After seeing this trend multiple times across different customer sites, we did some research into Google’s Search index to understand why this happened.

Based on our research we found that Google’s Search index is designed to actively remove pages from its search results AND forget about them over time.

Note: You can read more about our research and data here:

To quickly summarise the research:

The 3 not indexed coverage states you see in Google Search Console (‘Crawled - currently not indexed’, ‘Discovered - currently not indexed’, and ‘URL is unknown to Google) reflect the crawl priority of those pages.

As a page becomes forgotten it moves through these 3 not indexed coverage states. Eventually reaching the ‘URL is unknown to Google’.

This research was supported by a comment from Gary Illyes when asked on LinkedIn why historically crawled and indexed pages can move to ‘URL is unknown to Google’:

“Those have no priority (URL is known to Google); they are not known to Google (Search) so inherently they have no priority whatsoever. URLs move between states as we collect signals for them, and in this particular case the signals told a story that made our systems "forget" that URL exists. I guess you could say it actually fell out the barrel altogether.”

The reply here mentions that URLs move between “states” as Google’s system picked up signals over time (which backs up our own research). And that historically crawled and indexed pages can eventually move to ‘URL is unknown to Google’.

To quote Gary, Google’s systems will eventually “forget” that a URL exists.

The data from Indexing Insight gives us the ability to measure and monitor how long it takes for Google to ‘forget’ a URL. All by using the Last Crawl Time metric.

If we combine the data from the 130-day indexing rule study we can build a picture of how long it takes for a page to be forgotten by Google’s Search index:

✅ 1-130 days: Between 1 - 130 days of being crawled 90% of the pages are ‘submitted and indexed’.

❌ 131-180 days: Between 131 - 190 days 50% - 90% of the not indexed pages have ‘crawled - currently not indexed’ index coverage state.

👻 190+ days: After 190 days since the pages were crawled 90% of the pages are made up of ‘Discovered - crawled currently not indexed’ or ‘URL is unknown to Google’.

If we layer this data over how Google’s Search index (might) work diagram we can now fill in the gaps for the different crawl priority “tiers”.

After a page has been deindexed it doesn’t take long for it to be “forgotten” by Google (zero priority) in the crawling queue.

After just 60 days of not being crawled a page can go from ‘Crawled - currently not indexed’ to ‘Discovered - currently not indexed’ or ‘URL is unknown to Google’.

It can take 4 months for Google to actively remove a page from search results (indexed to not indexed) but only 2 months for not indexed pages to start to be forgotten by Google (meaning zero or close to zero crawling priority).

SEO teams can make educated guesses on crawl frequency reflecting how important a page is to Google but our study (and research) should remove a lot of guesswork.

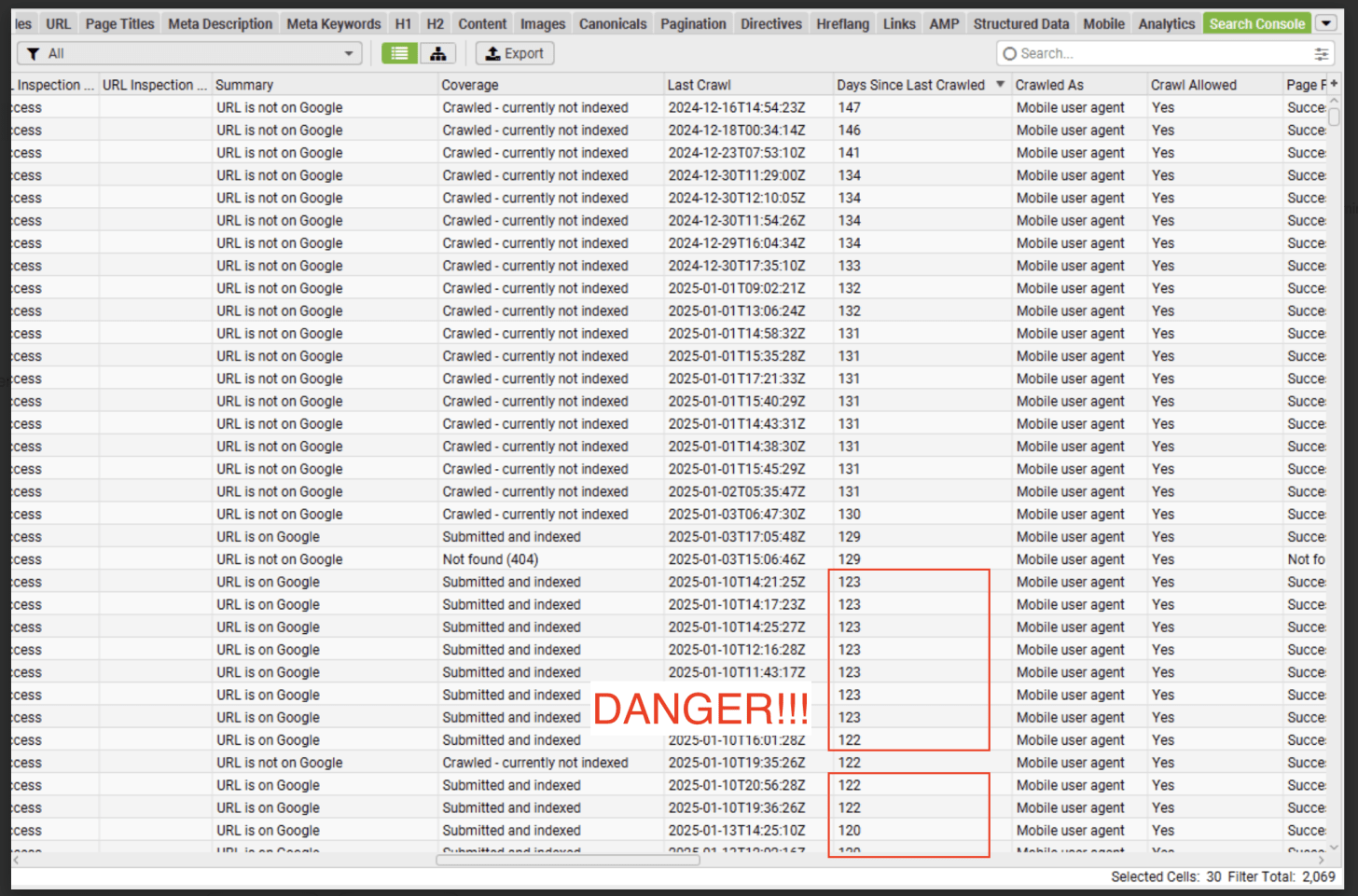

Now we have a clear set of benchmarks that we can use to inform our SEO strategies.

For example, when using URL Inspection API with Screaming Frog you can now start to understand the crawl priority of your indexed and not indexed pages.

By understanding the crawl priority of your pages using index coverage states you can also start to uncover quality issues on your website. AND start to identify which indexed pages are at risk of being deindexed.

At Indexing Insight we’re working hard in the background to group pages into Days Since Last Crawl reports to help customers identify unique SEO insights that can help inform content quality.

Indexing Insight is a Google indexing intelligence tool for SEO teams who want to identify, prioritise and fix indexing issues at scale.

Watch a demo of the tool below to learn more 👇.

Subscribe to the newsletter to get more unique indexing insight straight to your inbox 👇.

If a page hasn’t been crawled in the last 130 days, it gets deindexed.

This is the idea that Alexis Rylko put forward in his article: Google and the 130-Day Rule. Alexis identified that by using Days Since Last Crawl metric in Screaming Frog + URL Inspection API you can quickly identify pages at risk of becoming deindexed.

Pages are at risk of being deindexed if they have not been crawled in 130 days.

At Indexing Insight we track Days Since Last Crawl for every URL we monitor for over 1 million pages. And decided to run our own study to see if there is any truth to 130 day indexing rule.

Side note: The 130 day indexing rule isn’t a new idea. A similar rule was identified by Jolle Lahr-Eigen and Behrend v. Hülsen who found that Googlebot had a 129 days cut off in its crawling behavior in a customer project in January 2024.

The indexing data pulled in this study is from Indexing Insight. Here are a few more things to keep in mind when looking at the results:

👥 Small study: The study is based on 18 websites that use Indexing Insight of various sizes, industry types and brand authority.

⛰️ 1.4 million pages monitored: The total number of pages used in this study is 1.4 million and aggregated into categories and analysed to identify trends.

🤑 Important pages: The websites using our tool are not always monitoring ALL their pages, but they monitor the most important traffic and revenue-driving pages.

📍 Submitted via XML sitemaps: The important pages are submitted to our tool via XML sitemaps and monitored daily.

🔎 URL Inspection API: The Days Since Last Crawl metric is calculated using the Last Crawl Time metric for each page is pulled using the URL Inspection API.

🗓️ Data pulled at the end of March: The indexing states for all pages were pulled on 17/04/2025.

Only pages with last crawl time included: This study has included only pages that have a last crawl time from the URL Inspection API for both indexed or not indexed pages.

Quality type of indexing states: The data has been filtered to only look at the following quality indexing state types: ‘Submitted and indexed’, ‘Crawled - currently not indexed’, ‘Discovered - currently not indexed’ and ‘URL is unknown to Google’. We’ve filtered out any technical or duplication indexing errors.

The 130-day indexing rule is true. BUT it’s more of an indicator than a hard rule.

Our data from 1.4 million pages across multiple websites shows that if a page has not been crawled in the last 130 days then there is a 99% chance the page is Not Indexed.

However, there are Not Indexed pages crawled in less than 130 days.

This means that the 130-day rule is not a hard rule but more of an indicator that your pages might be deindexed by Google.

The data does show that the longer it takes for Googlebot to crawl a page, the greater the chance that the page will be Not Indexed. But after 130 days, the number of Not Indexed pages jumps from around 10% to 99%.

Below is the raw data to understand the scale of the pages in each category.

We broke down the data into last crawl buckets between 100 - 200 days.

The data shows that between 100 - 130 days the index coverage is between 94% - 85%.

But, after 131 days, the Not Index coverage shoots up.

The Not Indexed coverage state goes from 68% to 100% between 131 and 151 days. There are still pages indexed after 131 days, but the Index coverage reduces significantly between 131 - 150 days.

After 151 days, there are 0 indexed pages.

Below is the raw data to understand the scale of the pages in each crawl bucket.

The 130-day indexing rule is an indication that your pages will get deindexed.

However, it’s not a hard rule. There will be Not Indexed pages that can be crawled in the last 130 days. Based on the data, 2 rules stand out when it comes to tracking days since last crawl:

🔴 130-day rule: If a page hasn’t been crawled in the last 130 days, then there is a 99% chance the page will not be indexed.

🟢 30-day rule: If the page has been crawled in the last 30 days, then there is a 97% chance the page is indexed.

The longer it takes for Googlebot to crawl your pages (130+ days), the greater the chance the pages will be not indexed.

This shouldn’t come as a surprise to many experienced SEO professionals.

The idea of crawl optimisation and tracking days since last crawl is not new. Over the last 10 years, many SEO professionals like AJ Kohn have talked about the idea of CrawlRank. And Dawn Anderson has provided deep technical explanations on how Googlebot crawling tiers work.

To summarise their work in a nutshell:

Pages crawled less frequently compared to your competitors receive less SEO traffic. You win if you get your important pages crawled more often by Googlebot than your competition.

The issue has always been tracking days since the last crawl for many websites at scale and identifying the exact length of time when pages are deindexed.

However, at Indexing Insight, we can automatically track the last crawl time for every page we monitor AND calculate the days since last crawl for every page.

What does this mean?

It means very soon we’ll be able to add new reports to Indexing Insight that allow customers to, at scale, identify which important pages are at risk of being deindexed. And allow you to monitor how long it takes for Googlebot to crawl important pages for your website.

Crawl Budget Optimisation: You are what Googlebot Eats by AJ John

Large site owner's guide to managing your crawl budget by Google

Negotiating crawl budget with googlebots by Dawn Anderson

Indexing Insight is a Google indexing intelligence tool for SEO teams who want to identify, prioritise and fix indexing issues at scale.

Watch a demo of the tool below to learn more 👇.

Indexing Insight is a Google indexing intelligence tool for SEO teams who want to identify, prioritise and fix indexing issues at scale.

Watch a demo of the tool below to learn more 👇.

Google is actively removing pages from its search results.

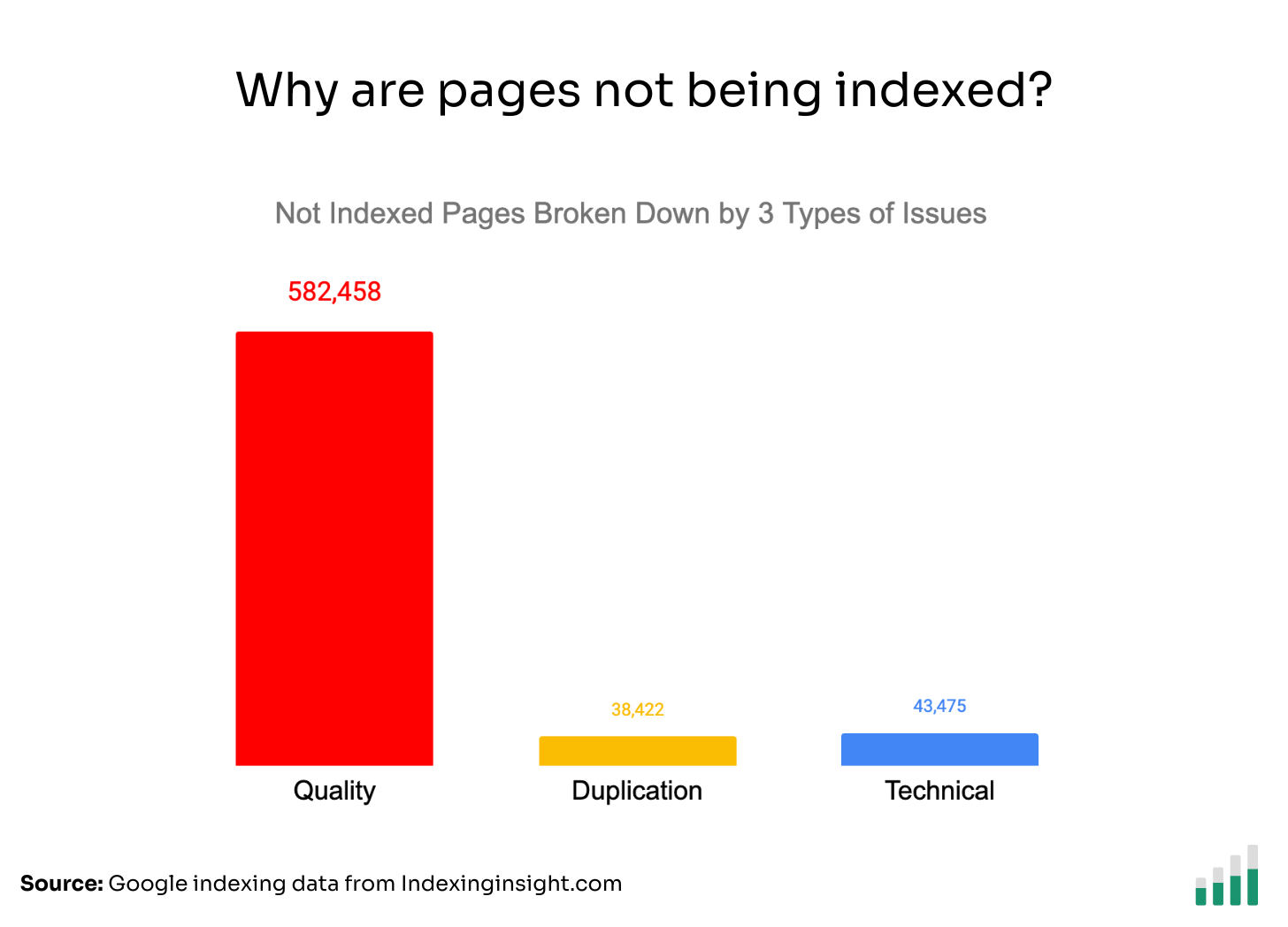

At Indexing Insight, we analysed the indexing data of 1.7 million pages across 18 websites. And found that 88% of not indexed pages were due to quality issues.

Important pages are actively being removed and forgotten by Google.

It doesn’t matter if you’re a large or small website. A big brand or a small brand. The trend is always the same. The biggest reason why your pages are not indexed is that they are actively being removed and forgotten by Google’s index.

Let’s dive into the methodology and findings.

The indexing data pulled in this study is from Indexing Insight. Here are a few more things to keep in mind when looking at the results:

👥 Small study: The study is based on 18 websites that use Indexing Insight of various sizes, industry types and brand authority.

⛰️ 1.7 million pages monitored: The total number of pages used in this study is 1.7 million and aggregated into categories and analysed to identify trends.

🤑 Important pages: The websites using our tool are not always monitoring ALL their pages, but they monitor the most important traffic and revenue-driving pages.

📍 Submitted via XML sitemaps: The important pages are submitted to our tool via XML sitemaps and monitored daily.

🔎 URL Inspection API: The indexing verdict (Indexed vs Not Indexed) and the indexing state for all the pages have been pulled using the URL Inspection API.

🗓️ Data pulled at the end of March: The indexing states for all pages were pulled on 31/03/2025.

Only inspected pages included: This study has included only pages that have an indexed or not indexed verdict (this means some websites do not have all the data included).

Alright, let’s jump into the findings!

Based on our first-party data, this is what we found (and it surprised us!).

The indexing data shows that marketplace and listing websites have the lowest Indexing Coverage score >70% (the % of pages indexed vs total monitored pages).

News websites had the best Index Coverage score for monitored pages at 97%.

Ecommerce websites didn’t have as many indexing issues, but still had less than 90% Index Coverage score for all the monitored pages.

Side note: Index Coverage score is a metric to indicate the scale of your indexing issues for a website or set of monitored pages. You want to aim for 90% or more.

If we look at the raw indexed vs not indexed page numbers, we can see the scale of the indexing issues for marketplace and listing websites.

They make up a lot of the indexing issues we monitor.

If we layer Moz Brand Authority with our indexing data we can see that even big brands suffer from indexing issues for important traffic and revenue-driving pages.

The indexing data shows that both small and big brands have an Index Coverage score around 85 - 91%.

If we look at the raw data for indexed vs not indexed pages by Moz Brand Authority, we can see the scale of the problem for brands.

The indexing data shows that even big brands suffer from large-scale indexing issues.

Interestingly, if you only focus on ecommerce, marketplace and listing websites, the average Index Coverage score by Moz Brand Authority is lowered.

Big and small brands with Ecommerce, Marketplace and Listing websites suffer from much larger-scale indexing issues than news or blog websites.

This makes sense as these sorts of websites have lots more pages in our data set.

It doesn’t matter how we chop or slice the not indexed pages, the trend is always the same:

Quality indexing issues are the biggest reason why important pages are not indexed.

If we group all the not indexed pages across all the websites into the 3 types of indexing issues, we can see that quality issues make up 88% of all monitored indexing issues.

How do you define quality?

Quality is Indexed pages being actively removed from Google’s search results, and Not Indexed pages actively being forgotten by the search index over time.

Learn more about quality not indexed category here.

This means that the biggest reason why important pages are not indexed is that they are being actively removed from Google’s search results and, over time, forgotten.

Let's break down the quality type by indexing state.

You can see that ‘URL is unknown to Google’ and ‘Discovered - currently not indexed’ make up 67% of the 500,000+ not indexed pages in the quality type.

These might appear to be crawling issues, but are in reality indexing issues.

Our research has found that ‘URL is unknown to Google’, ‘Crawled - currently not indexed’, and ‘Discovered - currently not indexed’ need new definitions. Indexing states for not indexed pages can change over time as Google actively removes and forgets pages.

Side note: You can learn more about our indexing state changes:

Quality indexing issues are impacting different websites and brand sizes.

Even if we group the 3 types of not indexed pages by Moz brand authority, we get the same trend: Quality indexing issues are the biggest reason why important pages are not indexed for big, medium or small brands.

If we group the 3 types of indexing issues by website type, we get the same trend for e-commerce, marketplace and listing websites. Quality indexing issues are the biggest reason important pages are not indexed.

Interestingly, news websites suffer more from technical indexing issues.

Finally, if we then group the 3 types of not indexed pages by website size, we can see the same trend: Quality indexing issues are the biggest reason why important pages are not indexed across both small and large websites.

The only exception to this rule is websites that are monitoring 100,000 - 500,000 pages. As most of the websites in this category are news websites.

The findings from our indexing data were surprising.

Although we’ve seen Google actively remove pages, we were surprised to find the scale of quality issues across all monitored pages.

Our first-party data has uncovered that Google Search Console misreports indexing states for pages being actively forgotten. And that the definitions for ‘crawled - currently not indexed’ and ‘URL is unknown to Google’ need to change. But we never thought that quality issues would be the main reason that important traffic and revenue-driving pages are not indexed.

Especially for marketplace, listing and ecommerce websites with a big or small brand.

There was a core update on the 13th March, which could have contributed to the number of quality issues found in the monitored indexing data. We know that Google core updates don’t just impact on SEO traffic but on the indexing. Still, this shows that there are quality issues on the website, which are causing pages to drop out of the index.

As Google continues to try to improve its core algorithms, we might see more important traffic and revenue-driving pages drop out of Google’s index.

Ever wondered why some of your important pages aren't indexed in Google?

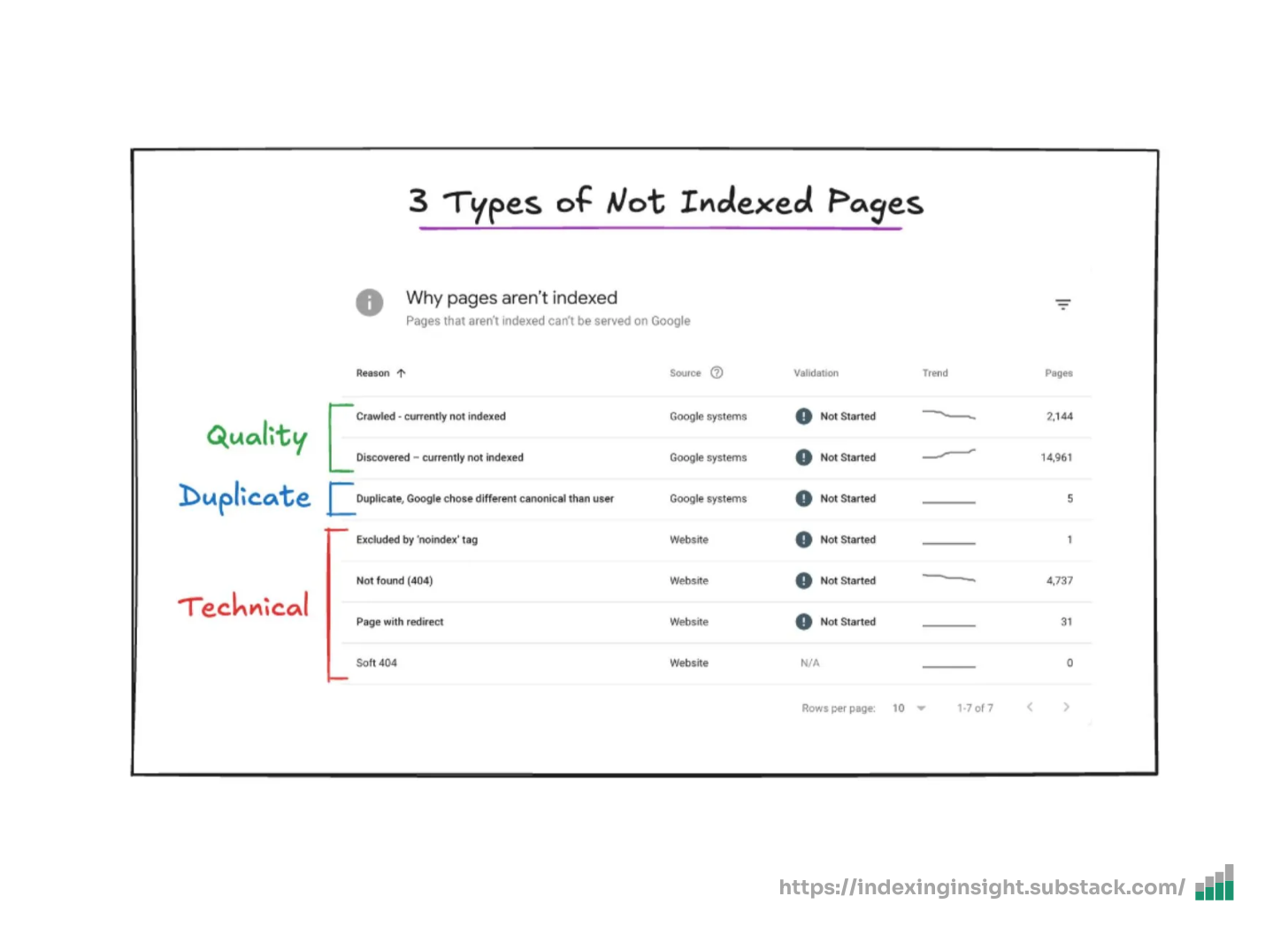

Despite submitting your URLs through XML sitemaps and following best practices, many pages still end up in the dreaded "Not Indexed" category in Google Search Console.

In this newsletter, I'll explain the 3 common types of Not Indexed pages that every SEO professional should know about. And how to identify which category your pages fall into.

So, let's dive in.

The three types of Not Indexed pages are:

1️⃣ Technical: These Not Indexed errors are about pages that either don't meet Google's basic technical requirements or have directives to stop Google from indexing the page.

2️⃣ Duplication: These Not Indexed errors are about pages that trigger Google’s canonicalization algorithm, and a canonical URL is selected from a group of duplicate pages.

3️⃣ Quality: These Not Indexed errors are about pages that are actively being removed from Google’s search results and, over time, forgotten.

Let’s dive into each one and understand them better!

Before we dive in, I want to separate out important and unimportant pages.

When trying to fix indexing issues, you should always separate pages into two types:

🥇 Important pages

😒 Unimportant pages

An important page are pages that you want to:

Appear in search results to help drive traffic and/or sales

Help pass link signals to other important pages (e.g. /blog)

For example, if you’re an ecommerce website, you want your product pages to be crawled, indexed and ranked for relevant keywords.

You also want your /blog listing page to be indexed so it passes PageRank (link signals) to your blog posts. So, these important page types can also appear in search results and drive SEO traffic.

Unimportant pages are pages that you don’t want to:

Appear in search results

Waste Googlebot crawl budget

Help pass link signals to other pages.

This doesn’t mean we just ignore these pages completely. It just means we’re not spending time trying to get these page types indexed in Google.

For example, a lot of ecommerce content management systems (CMS) by default will generate query strings (parameter URLs) which can be crawled and indexed by Google. And we need to properly handle these URLs to help Google not index the pages.

The best way to separate important and unimportant pages is with XML sitemaps.

An XML sitemap that contains your important pages submitted to Google Search Console will allow you to filter the page indexing report by submitted (important) vs. unsubmitted (unimportant) pages.

Right, now let’s dive in!

The first type of error is about the minimum technical requirements to get indexed.

These pages either don't meet Google's basic technical requirements or have directives that explicitly tell Google not to index them:

Server error (5xx)

Redirect error

URL blocked by robots.txt

URL marked ‘noindex’

Soft 404

Blocked due to unauthorized request (401)

Not found (404)

Blocked due to access forbidden (403)

URL blocked due to other 4xx issue

Page with redirect

Google detected that the page does not meet the minimum technical requirements.

For a page to be eligible to be indexed it must meet the following technical requirements:

Googlebot isn't blocked.

The page works, meaning that Google receives an HTTP 200 (success) status code.

The page has indexable content.

If we group the technical errors in Google Search Console, they correspond with one of the minimum requirements:

Googlebot isn't blocked

URL blocked by robots.txt

Blocked due to unauthorized request (401)

Blocked due to access forbidden (403)

URL blocked due to other 4xx issue

Google receives an HTTP 200 (success) status code

Server error (5xx)

Redirect error

Not found (404)

Page with redirect (3xx)

The page has indexable content.

URL marked ‘noindex’

Soft 404

Generally, these types of Not Indexed pages are within your control to fix.

Now that we’ve grouped these errors under specific categories, it can be easier to identify and address them.

If an important page is returning this type of error make sure it can be crawled by Googlebot. An important page can become blocked when:

Robots.txt rule is blocking the page from being crawled

A page has been hidden behind a log-in

A CDN is soft or hard blocking Googlebot

You can test if an important page is blocked using Robots.txt parser tool and read more about how to debug CDNs and crawling.

If an important page is NOT returning a HTTP 200 (success) status code then Googlebot will not index the page.

There are 3 reasons an important page is returning non-200 status code:

The non-200 status is not intentional (and needs to return a 200 status code)

The non-200 status is intentional (and the XML sitemap has not been updated)

The page is returning a 200 status code but Googlebot has not recrawled the page.

If an important page is unintentionally returning a non-200 status code it could because the page was 3xx redirected, returning a 4xx or a 5xx error. You can read more about how different HTTP status code impact Googlebot.

A JavaScript website can also return incorrect status codes for important pages. You can read more about JavaScript SEO best practices and HTTP status codes in Google’s official documentation.

Finally, don’t panic if Google is returning a non-200 HTTP status code error for one of your important pages. Especially if you know the page (or pages) were changed recently.

Sometimes, Googlebot hasn’t crawled the page, or the reports take time to catch up with the changes made to your website.

Check with the Live URL test in the URL inspection tool in Google Search Console.

Finally, if your important pages do not have indexable content it is usually because:

Googlebot discovered a noindex tag on the page.

Googlebot analysed the content and believes it is a soft 404 error.

If an important page has a noindex tag (meta robots or X-robots) then Google will not render or index the page. You can learn more about the noindex tag on Google’s official documentation.

If an important page has a Soft 404 error then this means that Google believes the content should return a 404 error. This usually happens because Google is detecting similar minimal content across multiple pages that make it think the pages should be returning a 404 error.

You can learn more about fixing soft 404 errors in Google’s official documentation.

The second type of not indexed pages relate to duplicate content issues.

These types of errors are to do with Google canonicalization process in the indexing pipeline (I’ve provided descriptions as these are a bit more complicated):

Alternate page with proper canonical tag - The page has indicated that another page is the canonical URL that will appear in search results.

Duplicate without user-selected canonical - Google has detected that this page is a duplicate of another page, that it does not have a user-selected canonical and that they have chosen another page as the canonical URL.

Duplicate, Google chose different canonical than user - Although you have specific another page as the canonical URL, Google has chosen a different page as the canonical URL to appear in search results.

Pages are grouped into this category because of Google’s canonicalization algorithm.

When Google identifies duplicate pages across your website it:

Groups the pages into a cluster.

Analyses the canonical signals around the pages in the cluster.

Selects a canonical URL from the cluster to appear in the search results.

This process is called canonicalization. However, the process isn't static.

Google continuously evaluates the canonical signals to determine which URL should be the canonical URL for the cluster. It looks at:

3xx Redirects

Sitemap inclusion

Canonical tag signals

Internal linking patterns

URL structure preferences

If a page was previously the canonical URL but new signals make Google select another URL in the cluster, then your original page gets removed from search results.

These types of Not Indexed pages are within your control to fix.

There are 3 reasons why your important pages are appearing in these categories:

Important pages don’t have a canonical tag.

Important page’s have been duplicated due to website architecture.

Important page’s canonical signals lack consistency across the website.

If an important page (or pages) does not have a canonical tag, then this can cause Google to choose a canonical URL based on weaker canonical signals.

Always make sure you specific the canonical URL by using canonical tags. For further information you can read how to specify a canonical link in Google’s documentation.

If the signals around an important page aren’t consistent then this can cause Google to pick another URL as the canonical URL in a cluster.

Even if you use a canonical tag.

You need to ensure canonical signals are consistent across your website for the URLs you want to appear in search results. Otherwise, Google can, and will, choose the canonical URL for you. And it might not be the one you prefer.

Google provides documentation on how to fix canonical issues in its official documentation.

The final type of not indexed page relates to quality issues, and these are the most challenging to address.

These types of indexing errors are split into 3 groups based on the signals collects around pages over time:

Crawled - currently not indexed: The page has either been discovered, crawled but not indexed OR the historically indexed page has been actively removed from Google’s search results.

Discovered—currently not indexed: A new page has been discovered but not yet crawled, OR Google is actively forgetting the historically indexed page.

URL is unknown to Google: A page has never been seen by Google OR Google has actively forgotten the historically crawled and indexed pages.

Google is actively removing these pages from its search results and index.

In another article, we discussed how Google might actively manage its index. The article discusses a patent that describes two systems: importance thresholds and soft limits.

The soft limit sets a target for the number of pages to be indexed. And the importance threshold directly influences which URLs get crawled and indexed.

Here's how it works according to the patent when the soft limit is reached:

Pages with an importance rank equal to or greater than the threshold are indexed.

As the threshold dynamically adjusts, URLs' crawl and indexing priority changes.

URLs with an importance rank far below the threshold have zero crawl priority.

This system explains why some pages move from the "Crawled—currently not indexed" to the "URL is Unknown to Google" indexing states in Google Search Console.

It's all about their importance rank relative to the current threshold.

You need to group the two types of important pages within this category to avoid actioning unimportant pages:

✅ Indexable: Live important pages that are indexable but are not indexed.

❌ Not Indexable: Important pages that are not indexable (301, 404, noindex, etc.)

Why do we need to distinguish between these 2-page types in Google Search Console?

Our first-party data has shown that a small % of pages that are not indexable over time can be grouped into these categories.

For example, an ‘Excluded by nonindex tag’ can become ‘Crawled - currently not indexed’ after roughly 6 months. This isn’t a bug but by design.

When trying to figure out why pages are grouped into this category, it’s important to distinguish between what pages are Indexable and Not Indexable.

Important indexable pages that are not indexed will be harder to fix.

Why?

If a page is live, indexable, and meant to be ranked in Google search and is underneath this category, then it means the website has bigger quality problems.

According to Google, they actively forget low-quality pages due to signals picked up over time.

There are 2 types of signals that can influence why Google might forget your important indexable pages:

📄 Page-level signals

🌐 Domain-level signals

The page-level signals can be grouped into three problems:

The indexable pages do not have unique indexable content.

The indexable pages are not linked to from other important pages.

The indexable pages weren’t ranking for queries or driving relevant clicks.

Why these three page-level signals in particular?

Google itself describes the 3 big pillars of ranking are:

📄 Content: The text, metadata and assets used on the page.

🔗 Links: The quality of links and words used in anchor text pointing to a page.

🖱️ Clicks: The user interactions (clicks, swipes, queries) for a page in search results.

In the DOJ trial, Google provided a clear slide highlighting that content (vector embeddings), user interactions (click models) and anchors (PageRank/anchor text) play a key role across all their systems.

A BIG signal used in ranking AND indexing is user interaction data.

The DOJ documents describe how Google uses “user-side data” to determine which pages should be maintained in its index. Also, another DOJ trial document mentions that the user-side data, specifically the query data, determines a document's place in the index.

Query data for specific pages can indicate if a page is kept as “Indexed” or “Not Indexed”.

What does this mean?

This means that for important pages, you must include unique indexable content that matches user intent and build links to the page with verified anchor text. These pillars are essential to help you rank for a set of queries searched for by your customers.

However, user interaction with your page will likely decide whether it remains indexed over time.

When reviewing your important indexable pages that Google is actively removing, look at:

Indexing Eligibility: Check if Googlebot can crawl the page URL and render the content using the Live URL test in the URL inspection tool in Google Search Console.

Content quality: Check if the page or pages you want to rank match the quality and user intent of the target keywords (great article from Keyword Insights on this topic).

Internal links to the page: Check if the pages are linked to from other important pages on the website and you’re using varied anchor text (great article from Kevin Indig on this topic).

User experience: Check if your important pages actually provide a good user experience, load quickly, and answer the user’s question (great article from Seer Interactive on this topic).

However, page-level signals aren’t the only factor at play.

New research by SEO professionals has found that domain-level signals like brand impact a website’s ability to rank, which, as mentioned above, eventually impacts indexing.

The domain-level signals can be grouped into three areas:

The pages are part of a sitewide website quality issue.

The pages are on a website that is not driving any brand clicks.

The pages/website are not linked to other relevant, high-quality websites.

Mark Williams Cook and his team identified an API endpoint exploit that allowed them to manipulate Google’s network requests. This exploit allowed his team to extract metrics for classifying websites and queries in Google Search.

One of the most interesting metrics extracted was Site Quality score.

The Site Quality score is a metric that Google give each subdomain ranking in Google search and is scored from 0 - 1.

One of the most interesting points from Mark’s talk is that when analysing a specific rich result, his team noticed that Google only shows subdomains above a Site Quality score threshold.

For example, his team noticed that sites with a site quality score lower than 0.4 were NOT eligible to appear in rich results. No matter how much you “optimise” the content, you can’t appear in rich results without a site quality greater than 0.4.

What makes up the Site Quality score?

Mark pointed out a Google patent called Site Quality Score (US9031929B1) that outlines 3 metrics that can be used to calculate the Site Quality score.

The 3 metrics that influence Site Quality score:

Brand Volume - How often people search for your site alongside other terms.

Brand Clicks - How often people click on your site when it’s not the top result.

Brand Anchors - How often your brand or site name appears in anchor text across the web.

What if you’re a brand new website?

Mark pointed to a helpful Google patent called Predicting Site Quality (US9767157B2**),** which outlines 2 methods for predicting site quality scores for new website’s.

The 2 methods for predicting Site Quality score for new websites:

Phrase Models: Predicts site quality by analysing the phrases present within a website and comparing them to a model built from previously scored sites.

User Query Data: Using historic click models, predict the site quality score based on how users interact with the particular website.

Is there any data or research that backs up the Site Quality Score?

Interestingly, Tom Capper at Moz studied Google core updates and found that the Help Content Update (HCU) impacted websites with a low brand authority vs domain authority ratio.

This means that core updates impacted sites with a low brand authority more heavily.

Google doesn’t use the brand authority metric from Moz in its ranking algorithms. However, Tom’s study shows a connection between your domain's brand “authority” and a website’s ability to rank in Google Search.

Why does any of this site quality or brand authority matter to indexing?

Let me lay it all out for us to think it through:

Google uses indexable content, anchor text and links to rank pages for queries.

Over time, Google uses “user-side data” (click models/query data) to determine if a page remains in its index at a page level.

Google tracks the site quality score of your subdomain (website), and only sites above a certain threshold (=> 0.4) can appear in features like rich results.

Based on a Google patent, the site quality score is calculated using brand volume, clicks and interactions (as well as predicting scores for new websites).

Based on Moz’s research, The Google Help Content Update (HCU) impacts websites with low brand authority but high backlink authority.

If a website or pages are affected by Google updates (like the HCU), they will not rank for user queries and will have less “user-side data” over time.

The less “user-side data” over time, the greater the chance that Google’s search index will decide to remove the page from search results actively.

Domain-level signals like brand and backlinks help important indexable pages rank in search.

By ranking in search results, your important pages will get “user-side data” (clicks/queries), increasing the chance of your pages remaining in Google’s index.

Domain-level signals drive rankings, impacting whether important pages remain in the index.

If your page-level changes are not improving the indexing status of your important pages, you might need to work on building the website and brand's authority.

It’s why so many enterprise websites suffer from index bloat.

Google is happy to crawl and index lower-quality pages on websites with higher site quality scores (but that’s an issue for another newsletter).

It sucks but the reality is that Google prefers to rank brands over small websites.

There are 3 main types of not indexed pages: technical barriers, duplicate content issues, and quality problems.

Technical barriers and duplicate content issues are generally within your control to fix through standard optimization practices.

Quality issues, however, require deeper analysis and often signal more significant problems with how your content meets user and search engine expectations.

Regularly monitoring your indexation status is crucial to identifying which category your not indexed pages fall into and taking appropriate action.

Indexing Insight helps you monitor Google indexing for large-scale websites. Check out a demo of the tool below 👇.

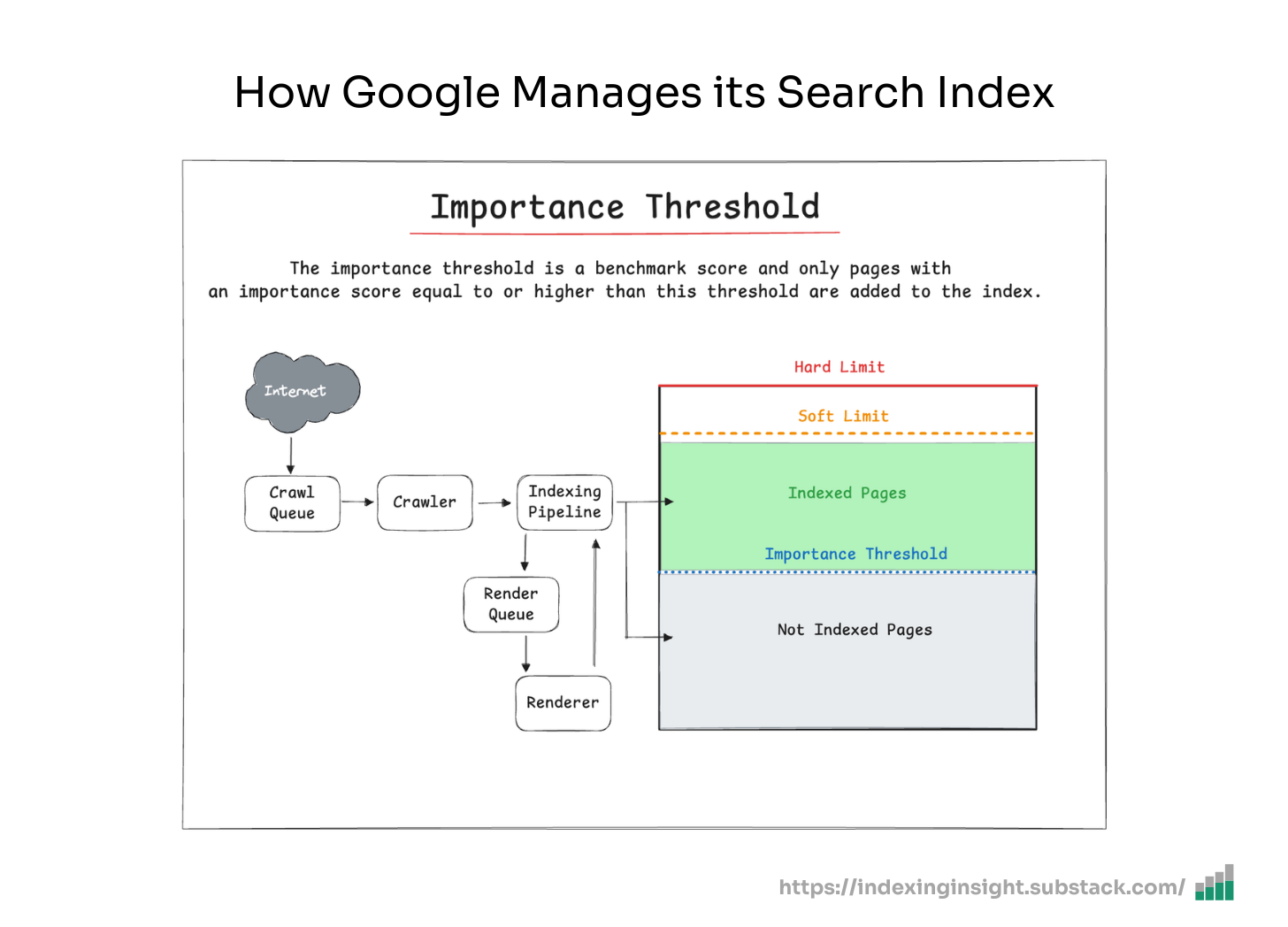

Google actively manages its index by removing low-quality pages.

In this newsletter, I'll explain insights from Google's patent "Managing URLs" (US7509315B1) on how Google might manage its search index.

I'll break down the concepts of importance thresholds, crawl priority, and the deletion process. And how you can use this information to spot quality issues on your website.

So, let's dive in.

⚠️ Before we dive in remember: Just because it’s in a Google patent doesn’t mean that Google engineers are using the exact systems mentioned in US7509315B1.

However, it does help build foundational knowledge on how Informational Retrival (IR) professionals think about managing a massive general search engine’s search index. ⚠️

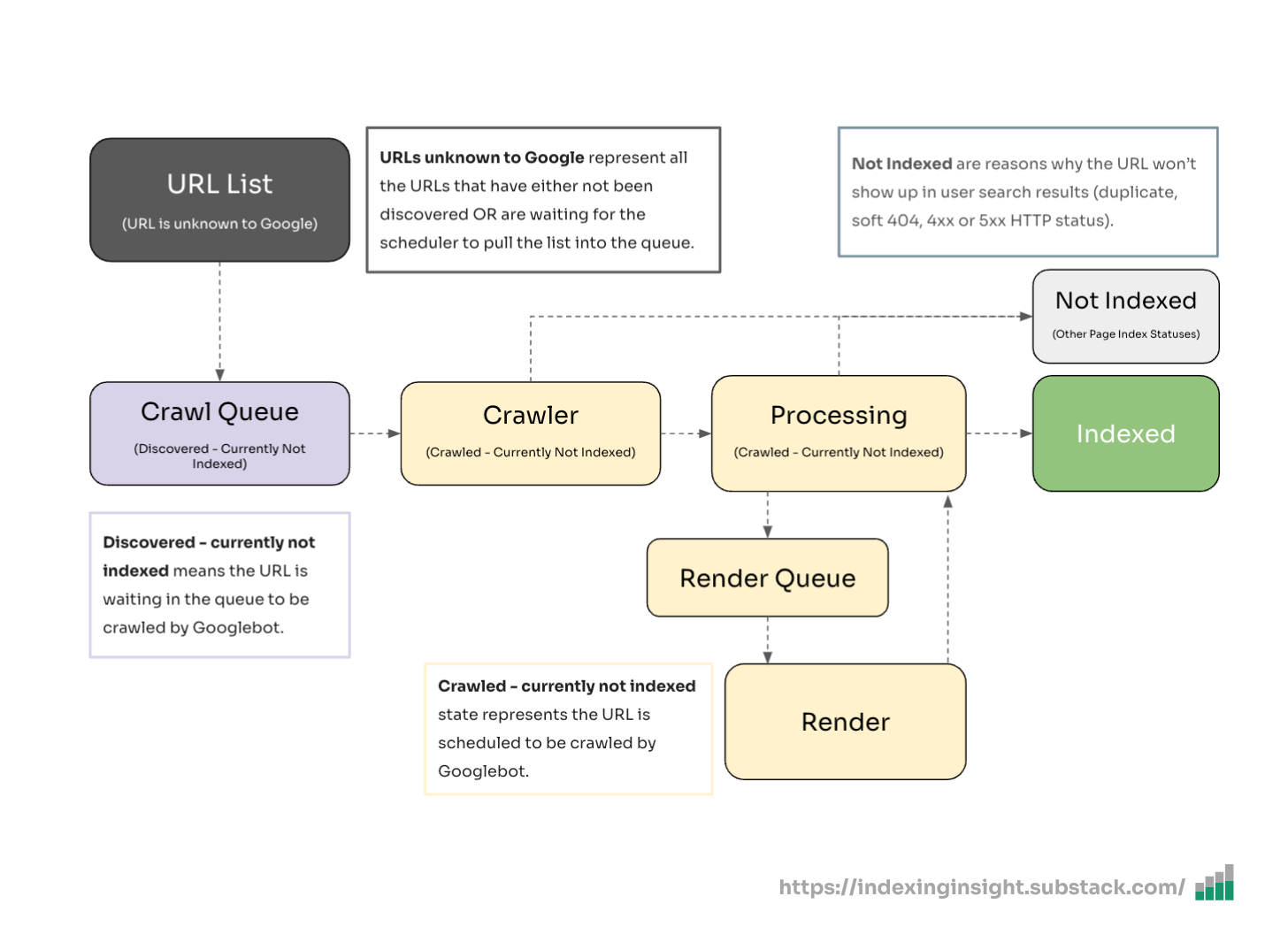

Google’s search index is a series of massive databases that store information.

To quote Google’s official documentation:

“The Google Search index covers hundreds of billions of webpages and is well over 100,000,000 gigabytes in size. It’s like the index in the back of a book - with an entry for every word seen on every webpage we index.” - How Google Search organizes information

When you do a search in Google, the list of websites returned in Google’s search results comes from its search index.

The process of building its search results is done in a 3-step process (source):

🕷️ Crawling: Google uses automated web crawlers to discover and download content.

💽 Indexing: Google analyses the content and stores it in a massive database.

🛎️ Serving: Google serves the stored content found in its search results.

If a website’s page is not indexed, it cannot be served in Google’s search results.

Any SEO professional or company can view the indexing state of their website’s pages in the Page Indexing report in Google Search Console.

Google actively removes pages from its search index.

This isn’t a new concept or idea. Lots of SEO professionals and Googler’s have flagged this over the last decade (but you have to really go looking). A few examples below.

Gary Illyes has mentioned in interviews that Google actively removes pages from its index:

“And in general, also the the general quality of the site, that can matter a lot of how many of these crawled but not indexed, you see in Search Console. If the number of these URLs is very high that could hint at a general quality issues. And I've seen that a lot uh since February, where suddenly we just decided that we are de-indexing a vast amount of URLs on a site just because the perception, or our perception of the site has changed.” - Google Search Confirms Deindexing Vast Amounts Of URLs In February 2024

Martin Splitt put out a video explaining Google actively removes pages from its index:

“The other far more common reason for pages staying in "Discovered-- currently not indexed" is quality, though. When Google Search notices a pattern of low-quality or thin content on pages, they might be removed from the index and might stay in Discovered.” - Help! Google Search isn’t indexing my pages

Indexing Insight first-party data shows that pages are actively removed from the index using our unique ‘Crawled - previously indexed report’:

And our first-party data shows that Google actively forgets URLs that were previously crawled and indexed:

But how does Google decide which pages to remove from it’s index?

A Google patent called "Managing URLs" (US7509315B1) might hold the answer to how a search giant like Google manages its mammoth Search Index.

Any database (like a Search Index) has limits.

According to the Google patent "Managing URLs" (US7509315B1), any search index comes with limits for the number of pages that can be efficiently indexed.

There are two different limits to managing a search engine’s index effectively:

Soft Limit: This limit sets a target for the number of pages to be indexed.

Hard Limit: This limit acts as a ceiling to prevent the index from growing excessively large.

These two limits work together to ensure Google's index remains manageable while prioritizing high-ranking documents.

However, reaching this limit doesn't mean a search engine stops crawling entirely.

Instead, it continues to crawl new pages but only indexes those deemed "important" enough based on query-independent metrics (e.g. PageRank, according to the patent).

This leads us to an interesting concept: the importance threshold.

The importance threshold is a benchmark score mentioned in the Google patent.

It describes that a new page should be indexed after the initial limit has been reached. Only pages with an importance score equal to or higher than this threshold are added to the index.

This ensures that a search engine index prioritizes indexing the most important content.

Based on the patent, there are two main methods for determining the importance threshold:

🔢 Ranking Comparison Method

🏛️ Histogram-Based Method

All known pages are ranked according to their importance.

The threshold is implicitly defined by the importance rank of the lowest-ranking page currently in the index.

For example, if a search engine had 1,000,000 pages indexed. It would rank (sort) the pages based on each calculated importance score. The lowest importance rank the list would be 3.

So the importance threshold in the Search Index would be 3.

The system would use a histogram representing the distribution of importance ranks.

The threshold is calculated by analyzing the histogram and identifying the importance rank corresponding to the desired index size limit.

For example, if a search engine had a limit of 1,000,000 pages. If the histogram shows that 800,000 pages have an importance rank of 6 or higher, the importance threshold would be 6.

The number of indexed pages can fluctuate due to the importance threshold.

This is due to the dynamic nature of both the importance threshold and the importance rankings of individual pages.

You can see this sort of process in action in the Page Indexing report in GSC.

According to the patent, three main factors cause these fluctuations:

🆕 New High-Importance Pages

🚨 Oscillations Near Threshold

📊 Importance Rank Changes

When new pages with importance rank above the current threshold, they're added to the index.

This can cause the total number of pages to exceed the soft limit, triggering an increase in the importance threshold and potentially removing existing pages with lower importance.

Gary Illyes actually confirmed that this process happens in Google’s Search Index.

Poor quality content (lower importance rank) will be actively removed if higher quality content is needs to be added to the index.

Existing pages are removed from the index because they drop below the unimportance threshold.

If an existing page's importance rank drops below the unimportance threshold (due to content updates, link structure changes, or poor user engagement from session logs), it might be deleted from the index, even if it was previously above the importance threshold.

Indexing Insight first-party data has seen indexed pages become not indexed pages in our ‘Crawled - previously indexed’ report.

Gary Illyes confirmed that Google Search’s index tracks signals over time and that these can be used to decide to remove pages from its search results:

“And in general, also the the general quality of the site, that can matter a lot of how many of these crawled but not indexed, you see in Search Console. If the number of these URLs is very high that could hint at a general quality issues. And I've seen that a lot uh since February, where suddenly we just decided that we are de-indexing a vast amount of URLs on a site just because the perception, or our perception of the site has changed.”

- Gary Illyes, Google Search Confirms Deindexing Vast Amounts Of URLs In February 2024

Pages with importance ranks close to the threshold are particularly susceptible to fluctuations.

As the threshold and importance ranks dynamically adjust, these pages might be repeatedly crawled, added to the index, and then deleted.

This creates oscillations in the index size.

The patent describes using a buffer zone to mitigate these oscillations, setting the unimportance threshold slightly lower than the importance threshold for crawling.

This reduces the likelihood of repeatedly crawling and deleting pages near the threshold.

Gary Illyes again confirms that a similar system happens in Google’s Search Index, indicating that pages very close to the quality threshold can fall out of the index.

But it can then be crawled and indexed again (and then fall back out of the index).

The patent also explains why your page’s indexing states can change over time.

At Indexing Insight, we have noticed using our first-party data that indexing state in GSC can indicate the crawl priority of a website.

The Google patent (US7509315B1) explains why this happens using two systems:

🔄 Soft vs Hard Limits

🚦 Importance threshold and crawl priority

There are two different limits to managing a search engine’s index effectively:

Soft Limit: This limit sets a target for the number of pages to be indexed.

Hard Limit: This limit acts as a ceiling to prevent the index from growing excessively large.

These two limits work together to ensure Google's index remains manageable while prioritizing high-ranking documents.

For example, if Google’s Search Index has a soft limit of 1,000,000 URLs, and the system detects they have hit that target, then Google will start to increase the importance threshold.

The result of increasing the importance threshold removes indexed pages that are below the importance threshold.

Which impacts the crawling and indexing of new pages.

The importance threshold directly influences which URLs get crawled and indexed.

Here's how it works according to the patent when the soft limit is reached:

Only pages with an importance rank equal to or greater than the current threshold are crawled and indexed.

As the threshold dynamically adjusts, URLs' crawl and indexing priority changes.

URLs with an importance rank far below the threshold have zero crawl priority.

This system explains why some pages move from the "Crawled—currently not indexed" to the "URL is Unknown to Google" indexing states in Google Search Console.

It's all about their importance rank relative to the current threshold.

The decline in importance rank score over time means that a URL can go from:

⚠️ “Crawled—currently not indexed"

🚧 “Discovered —currently not indexed"

❌ "URL is Unknown to Google"

Gary Illyes from Google confirmed that Google’s Search Index does “forget” URLs over time based on the signals. And that these URLs have zero crawl priority.

Understanding Google's index management can directly impact your SEO success.

Here are 4 tips to action the information in this article:

🚑 Monitor your indexing states

🏆 Focus on quality over quantity

🧐 Identify content that's at risk

🔄 Regularly audit and improve existing content

Check your indexing states on a weekly or monthly basis, especially after core updates.

Pay attention to the following trends:

Increase in pages in the 'Crawled - currently not indexed' report

Increases in pages in the 'Discovered - currently not indexed' report

Pages that are being flagged as 'URL is unknown to Google' in GSC

These shifts can indicate that Google is actively deprioritizing or removing your content from the index based on importance thresholds.

🙋♂️ Note to readers

Google Search Console makes monitoring indexing states difficult.

You can read more about why here:

The importance threshold mechanism shows that Google constantly evaluates page quality relative to the entire web.

This means:

Higher-quality pages push out lower-quality pages from the index

Importance score of your pages continues to worsen if you don’t improve them

Your content isn't just competing against your previous versions but against all new content being created.

This explains why a page that was indexed for years can suddenly get deindexed. Its importance rank may have remained static while the threshold increased.

URLs with frequent indexing state changes (oscillating between indexed and not indexed) are likely near the importance threshold.

These pages should be prioritized to be improved.

For example, if you notice a page was previously indexed but now shows as 'Crawled - currently not indexed', it's likely hovering near the importance threshold.

The patent suggests that Google continually reassesses page importance.

To maintain and improve your indexing, it’s important to:

Perform regular content audits focusing on thin content

Update and improve existing content rather than just creating new pages

Monitor internal links and user engagement metrics as they influence importance.

Google actively removes pages from its index.

In this newsletter, I explained how Google MIGHT use a set of processes to help manage its Search Index using the Google patent (US7509315B1).

The patent sheds some light on how your pages can be actively removed.

The concepts in the patent help explain indexing behaviour they are witnessed by SEO professionals when they use Google Search Console.

Hopefully, this newsletter has given you a deeper understanding of how Google works and what you should be doing to help get your pages indexed.

Indexing Insight helps you monitor Google indexing for large-scale websites with 100,000 to 1 million pages. Check out a demo of the tool below 👇.

After tracking millions of URLs with Indexing Insight, we've uncovered significant gaps in what Google Search Console tells you about your page’s indexing status.

In this newsletter, I'll reveal the top 5 things most SEOs don't know about the Page Indexing report and how these hidden insights could be affecting your SEO analysis.

So, let's dive in.

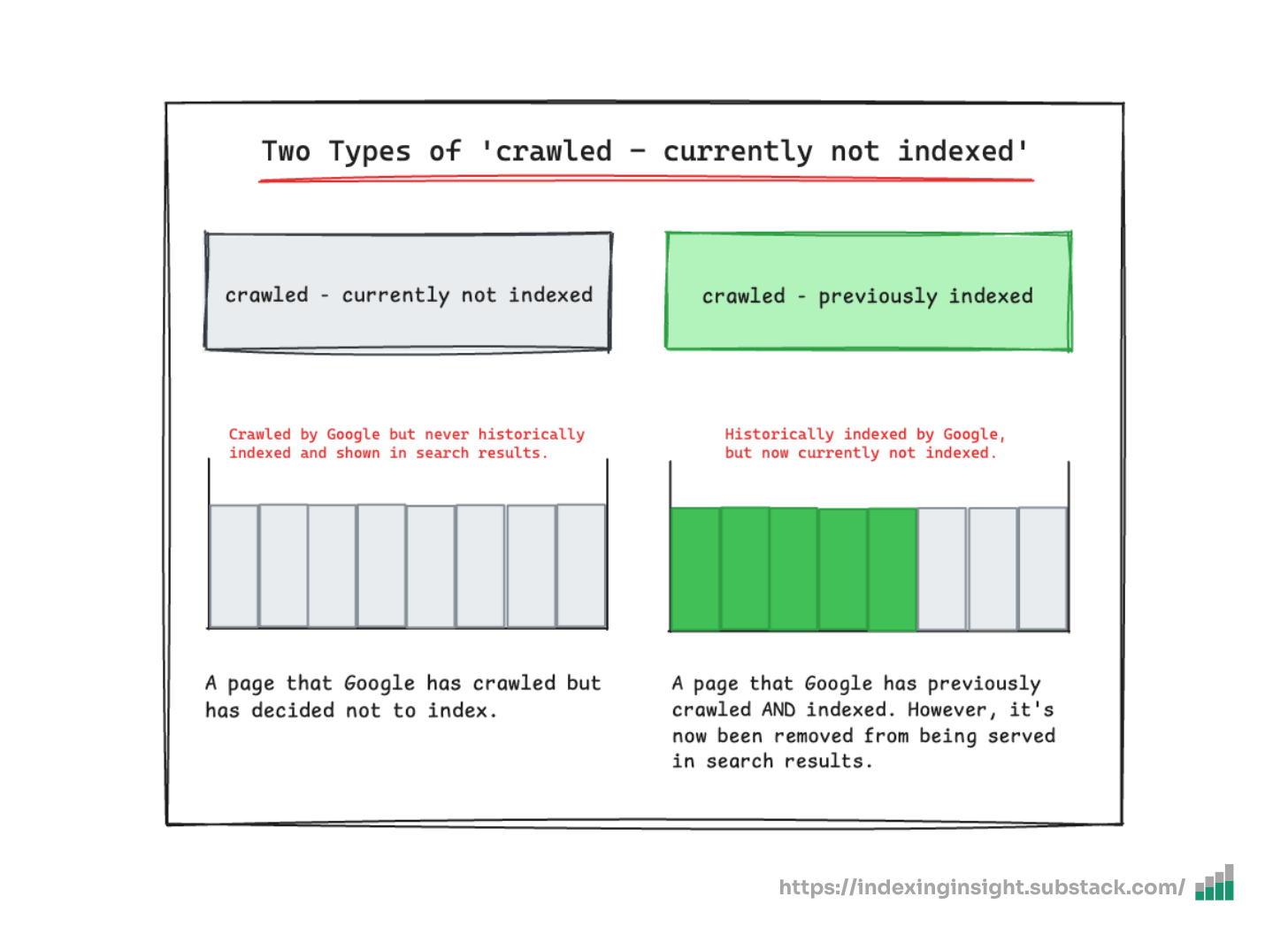

The current definition of 'crawled - currently not indexed' is misleading.

If you search for 'crawled - currently not indexed', most articles define this status as Google having crawled the page but not yet chosen to index it.

This comes directly from Google's documentation:

“The page was crawled by Google but not indexed. It may or may not be indexed in the future; no need to resubmit this URL for crawling”.

Page Indexing report, Google Search Documentation.

However, based on data from Indexing Insight, this definition is incomplete.

Our first-party data shows that 70-80% of pages with the 'crawled—currently not indexed' status in GSC have historically been indexed by Google.

And these pages have been actively removed by Google from its index.

This means when you see this status, you're not looking at pages waiting to be indexed. In fact, you're looking at pages Google has actively removed from its search results.

For one client alone, we found nearly 130,000 pages with what should be called 'crawled - previously indexed' status. That's 13% of their monitored pages that Google has actively removed from serving in search results.

This data helped the client understand they needed to take action. Not waiting for indexing.

Learn more by reading What is ‘Crawled - Currently Not Indexed’?.

The coverage state 'URL is unknown to Google' is even more misleading than you might think.

Google's official documentation states:

"If the label is URL is unknown to Google, it means that Google hasn't seen that URL before, so you should request that the page be indexed. Indexing typically takes a few days."

URL Inspection Tool, Search Console Help

But our data tells a different story. Many pages labeled as 'URL is unknown to Google' have actually been crawled and indexed before.

Gary Illyes from Google confirmed this phenomenon on LinkedIn, explaining that Google's systems can "forget" URLs as they purge low-value pages from their index over time.

The signals Google collects about a page can eventually lead to it being forgotten entirely.

For one website monitoring 1 million URLs, 16% of URLs were labelled 'URL is unknown to Google' and many of these had search performance data proving they were previously indexed.

Based on this first-party data, ‘URL is unknown to Google’ should be split into two different definitions:

URL is unknown to Google: The URL has never been discovered or crawled by Googlebot.

URL is forgotten by Google: The URL was previously crawled and indexed by Google but has been forgotten.

This distinction is crucial for understanding the true health of your website in Google's index.

Learn more by reading What is ‘URL is unknown to Google’?.

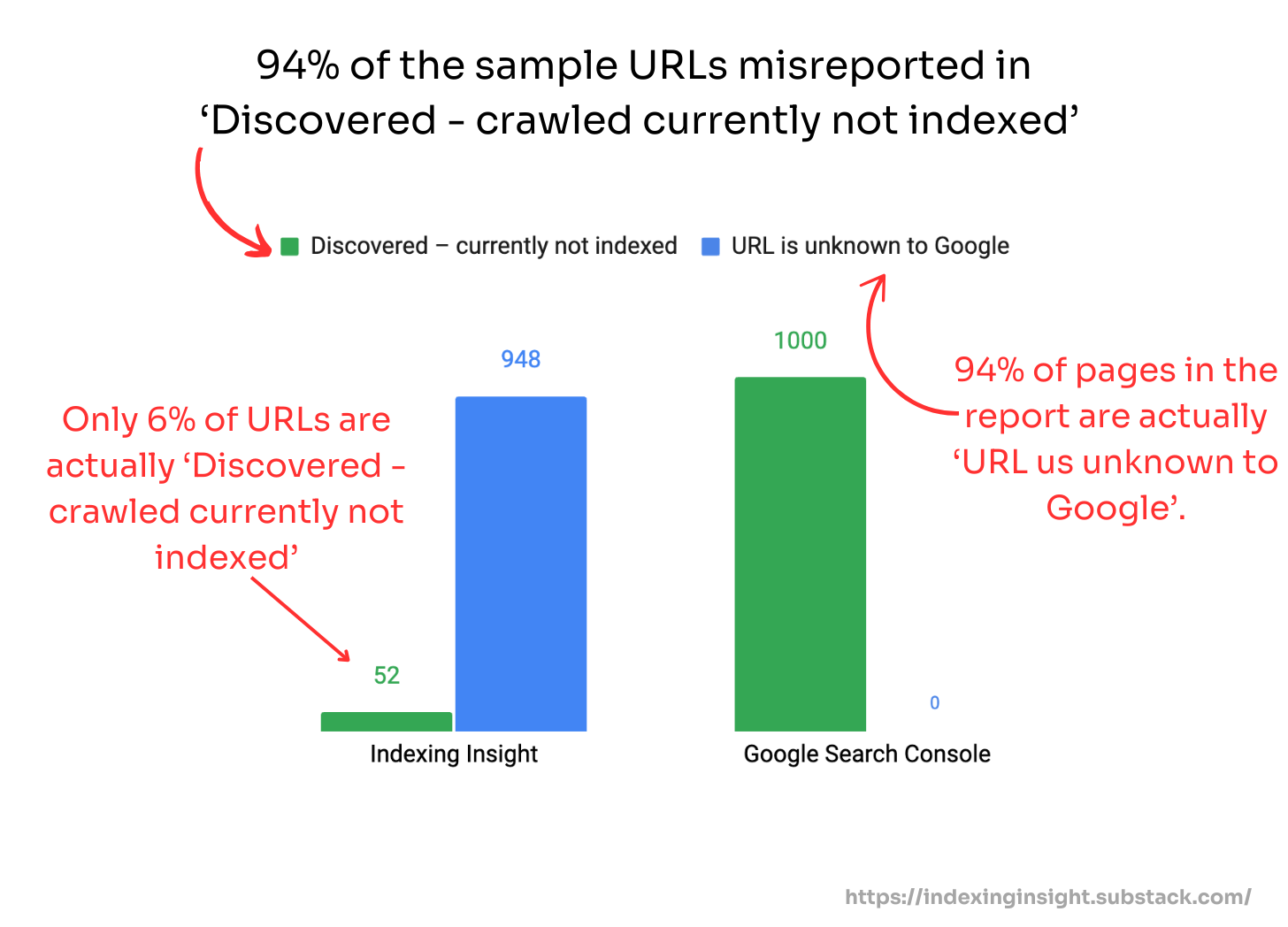

When Google actively deprioritizes your pages, GSC doesn't tell you the whole truth.

Our analysis found that 94% of pages that should be labeled 'URL is unknown to Google' are instead grouped under the 'Discovered - currently not indexed' report in GSC.

This misreporting isn't a bug. It's by design.

When you inspect these URLs individually using the URL Inspection tool, they show the 'URL is unknown to Google' status, contradicting what the Page Indexing report shows.

Why does this matter?

Because pages with 'URL is unknown to Google' status have zero crawl priority in Google's system, according to Gary Illyes.

When you can't see which pages have this status, you can't take appropriate action to address the underlying quality issues.

By grouping these URLs under 'Discovered - currently not indexed', GSC leads you to believe you have a discovery problem when you actually have a quality problem that's so severe Google has chosen to forget your content entirely.

Learn more by reading How Google Search Console misreports ‘URL is unknown to Google’.

The various index states in GSC aren't just status labels, they're indicators of how Google prioritizes crawling your site.

Based on data from Indexing Insight and confirmation from Google's Martin Splitt, we can map the index coverage states to Googlebot's crawl, render, and index process:

'Submitted and indexed': Higher priority, actively shown in search results

'Crawled - currently not indexed': Medium priority, stored but not served

'Discovered - currently not indexed': Low priority, on the crawl list but deprioritized

'URL is unknown to Google': Zero priority, completely forgotten

Pages can move backwards through these states over time.

This reverse progression through index states often accelerates after Google core updates, indicating system-wide reprioritization of what's worth crawling and indexing.

When a page moves from 'Submitted and indexed' to 'URL is unknown to Google,' it shows that Google's systems have determined that it has such a low value that it's not worth remembering.

Learn more by reading How Indexing States Indicate Crawl Priority and How Google Core Updates Impact Indexing.

When you see conflicting data between the URL Inspection tool and the Page Indexing report, you should always trust the URL Inspection tool.

Here's why:

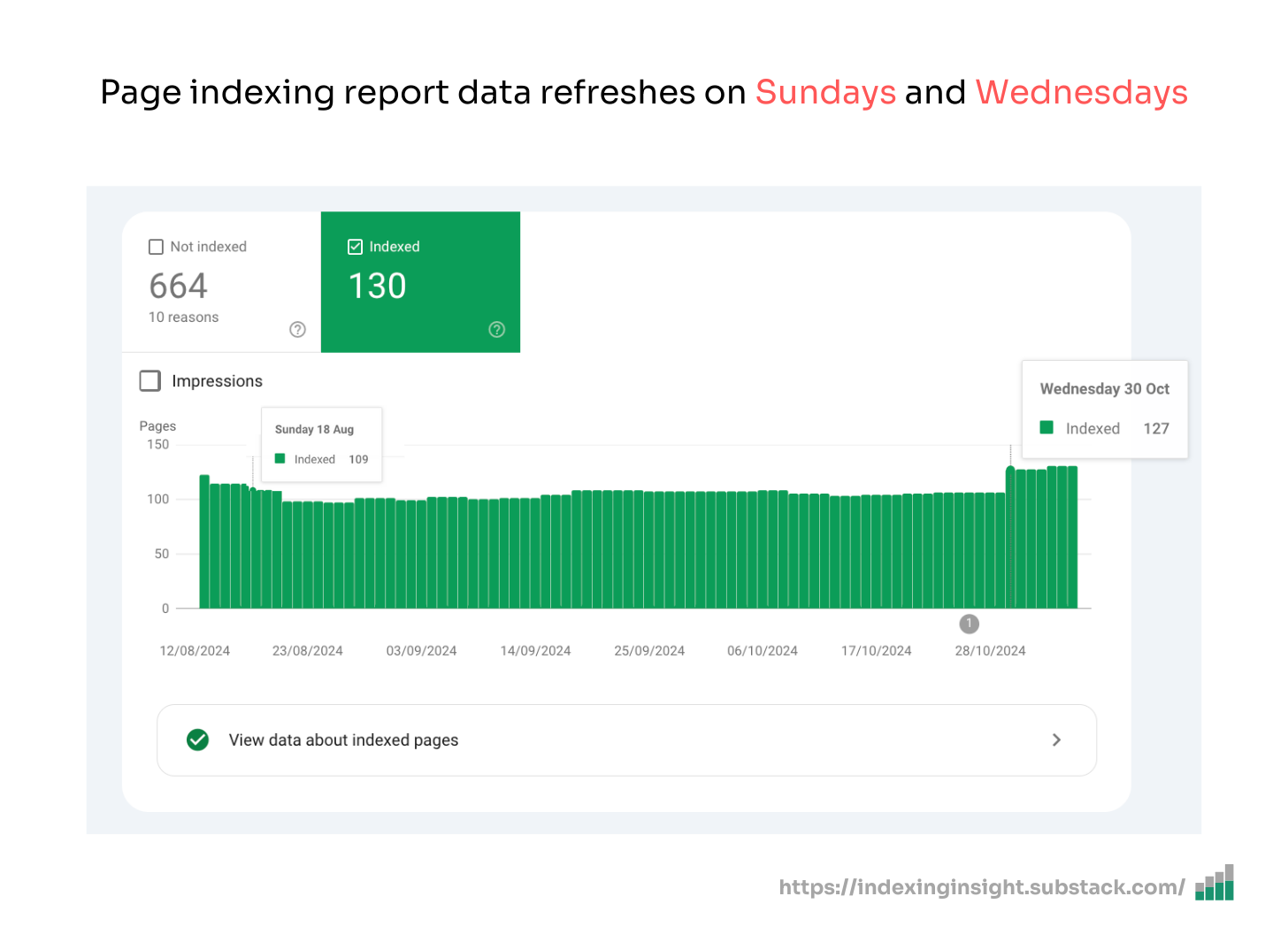

Page Indexing report updates only twice a week (Sundays and Wednesdays)

The URL Inspection tool pulls live information directly from Google's index

The Google Search Central team has confirmed that the URL Inspection Tool is the most authoritative source for indexing data and should be considered the source of truth when conflicts arise.

This means that for truly accurate indexing analysis, you need to inspect URLs individually or use the URL Inspection API, which is what Indexing Insight does to provide daily monitoring.

Learn more by reading URL Inspection Report vs Page Indexing Report: What's the difference?.

It completely changes how you should approach SEO analysis:

When you see 'crawled - currently not indexed', don't assume these pages are waiting to be indexed. Most likely, Google has actively removed them from the index.

After Google Core updates, check both your rankings AND your indexing status. Core updates don't just affect rankings — they actively reprioritize what's worth crawling and indexing.

Look beyond the surface-level Page Indexing report. The most important insights come from tracking coverage state changes over time, which GSC doesn't show.

Always verify with the URL Inspection tool for important pages with indexing issues rather than trusting the Page Indexing report.

If you find many important pages with 'URL is unknown to Google' or 'Discovered—currently not indexed' status, this indicates severe quality issues that need to be addressed before Google will consider re-indexing them.

These nuances become even more critical for sites with 100,000+ pages as the scale makes manual analysis through GSC virtually impossible.

The Page Indexing report in Google Search Console doesn't tell the full story about your website's indexing health.

By understanding the true meaning behind coverage states, the misreporting of 'URL is unknown to Google', and how index states indicate crawl priority, you can develop a more accurate picture of how Google views your content.

Importantly, when Google core updates roll out, they don't just impact rankings.

They actively cause Google's systems to reprioritize what is worth crawling and indexing, potentially removing large numbers of pages from the index entirely.

Hopefully, this newsletter has inspired you to examine your page indexing report with a new perspective and identify which pages are truly at risk in Google's indexing system.